Introduction

vlayer enables developers to extract, verify and integrate real-world data into Ethereum smart contracts. Our technology is powered by Zero Knowledge Proofs (ZKP) and Multi-Party Computation (MPC), allowing you to securely verify private data without exposing sensitive information.

Our four core features include:

- Web Proofs: Access verified web data, including APIs and websites, in your smart contracts

- Email Proofs: Tap into email content from your smart contracts and use it on-chain

- Time Travel: Leverage historical on-chain data in your smart contracts

- Teleport: Execute a smart contract across different EVM-compatible blockchain networks

vlayer allows smart contracts to be executed off-chain. The result of the execution can then be used by on-chain contracts.

What are some real-world applications of vlayer?

vlayer offers diverse applications across industries that demand secure and privacy-preserving data verification. By enabling users to prove specific facts without revealing underlying personal information, vlayer empowers businesses to build trust without the need to access or store sensitive data like names, birthdates, government IDs, or financial information.

A few real-world applications of vlayer include:

- Use Web Proofs to verify social media engagement for brand partnerships or to generate verifiable proof of asset custody in institutional holdings

- Use Email Proofs to reset account abstraction wallets

- Use Time Travel to verify historical ETH, ERC-721, or ERC-20 balances for airdrops, voting rights, or other on-chain entitlements

- Use proof of holdings on another blockchain as collateral for loans via Teleport

Additional use cases can be found here.

Sections

Getting Started

To get started with vlayer, install vlayer, set up your first project and check out the explainer section to learn how vlayer works. Finally, take a look at devnet, testnet & mainnet to learn about vlayer environments.

Features

See how to time travel across block numbers, teleport from one chain to another, prove data coming from email or web and use helpers for JSON and Regex.

From JavaScript

Learn how to interact with vlayer from your JS code and how to generate web proofs and email proofs using our SDK.

Advanced

Learn in-depth how:

- Prover and Verifier contracts work.

- Global Variables are set.

- Tests are run.

Installation

The easiest way to install vlayer is by using vlayerup, the vlayer toolchain installer.

Supported Platforms

Linux: Only Ubuntu 24.04 LTS or newer versions with x86_64 CPU architecture are supported. Other Linux distributions may work but are not officially supported.

Mac: Macs with Intel CPUs are not supported. Use a Mac with Apple Silicon.

Prerequisites

Before working with vlayer, ensure the following tools are installed:

Additionally, you'll need Bun to run examples. For more details, refer to the Running Examples Locally section.

Get vlayerup

To install vlayerup, run the following command in your terminal, then follow the onscreen instructions.

curl -SL https://install.vlayer.xyz | bash

This will install vlayerup and make it available in your CLI.

Occasionally, vlayerup might require an upgrade. To update it, simply run the same command again.

Using vlayerup

Running vlayerup will install the latest (nightly) precompiled binary of vlayer:

vlayerup

You can check that the binary has been successfully installed and inspect its version by running:

vlayer --version

Installing nightly version

You can use the following command to install a nightly version of vlayer:

vlayerup --channel nightly

Using vlayer test

In order to execute vlayer test with vlayer examples, vlayer requires risc0-ethereum version 3.0 or higher. Using an older version (< 3.0) will result in proof verification failures due to incompatible control roots and verifier parameters.

To ensure you have the most up-to-date version of the RISC Zero toolchain (cargo-risczero):

cargo install cargo-risczero --force

This installs or updates cargo-risczero to the latest version.

You can check that it has been successfully installed and inspect its version by running:

cargo risczero --version
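If you script your setup, a small guard can catch an outdated toolchain before you run vlayer test. The sketch below is a hypothetical helper, not part of vlayer or RISC Zero: it assumes the tool's version output contains a dotted version number such as 3.0.1 and compares it against a minimum such as the 3.0 requirement mentioned above.

```typescript
// Hypothetical helper: checks whether a version string reported by a tool
// (e.g. the output of `cargo risczero --version`) meets a minimum version.
// Assumes the text contains a dotted version like "3.0.1".
function parseVersion(text: string): number[] {
  const match = text.match(/(\d+)\.(\d+)(?:\.(\d+))?/);
  if (!match) {
    throw new Error(`No version number found in: ${text}`);
  }
  // A missing patch component is treated as 0.
  return [Number(match[1]), Number(match[2]), Number(match[3] ?? 0)];
}

function meetsMinimum(reported: string, minimum: string): boolean {
  const have = parseVersion(reported);
  const need = parseVersion(minimum);
  for (let i = 0; i < 3; i++) {
    if (have[i] !== need[i]) {
      return have[i] > need[i];
    }
  }
  return true; // versions are equal
}

console.log(meetsMinimum("cargo-risczero 3.0.1", "3.0")); // true
console.log(meetsMinimum("cargo-risczero 2.3.0", "3.0")); // false
```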

First steps with vlayer

Creating a new project

Run this command to initialize a new vlayer project:

vlayer init project-name

It creates a folder with sample contracts.

Adding to an existing project

Use the --existing flag to initialize vlayer within your existing Foundry project:

cd ./your-project && vlayer init --existing

Example project

To initialize a vlayer project with example prover and verifier contracts, use the --template flag as shown below:

vlayer init simple --template simple

The following templates are available for quick project setup:

- simple: Prove an ERC20 token balance at a specific block number.

- simple-email-proof: Mint an NFT to the owner of an email address from a specific domain.

- simple-teleport: Prove a cross-chain ERC20 token balance.

- simple-time-travel: Prove the average ERC20 token balance across multiple block numbers.

- simple-web-proof: Mint an NFT to the owner of a specific X/Twitter handle using Web Proofs.

- kraken-web-proof: Generate a server-side web proof of the ETH to USD exchange rate and store it on-chain.
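If you script project creation, a small wrapper can validate the template name before invoking the CLI. This is a hypothetical helper: the template list is copied from the list above and may change between vlayer releases, and the helper only builds the argument list in the vlayer init <name> --template <template> form shown earlier.

```typescript
// Template names as listed in this documentation (may change between releases).
const TEMPLATES = [
  "simple",
  "simple-email-proof",
  "simple-teleport",
  "simple-time-travel",
  "simple-web-proof",
  "kraken-web-proof",
] as const;

type Template = (typeof TEMPLATES)[number];

function isTemplate(candidate: string): candidate is Template {
  return (TEMPLATES as readonly string[]).includes(candidate);
}

// Builds the arguments for `vlayer init <project> --template <template>`.
function initArgs(projectName: string, template: string): string[] {
  if (!isTemplate(template)) {
    throw new Error(`Unknown template "${template}". Available: ${TEMPLATES.join(", ")}`);
  }
  return ["init", projectName, "--template", template];
}

console.log(["vlayer", ...initArgs("my-web-proof", "simple-web-proof")].join(" "));
// vlayer init my-web-proof --template simple-web-proof
```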

Directory structure

The vlayer directory structure resembles a typical Foundry project but with two additional folders: src/vlayer and vlayer.

- src/vlayer: Contains the Prover and Verifier smart contracts.

- vlayer: Contains contract deployment scripts, client SDK calls to the prover, and verifier transactions.

Running examples

❗️ Make sure that you have Bun installed in your system to build and run the examples.

First off, build the contracts by navigating to your project folder and running:

cd your-project

forge build

This compiles the smart contracts and prepares them for deployment and testing.

Please note that vlayer init installs Solidity dependencies and generates remappings.txt. Running forge soldeer install is not needed to build the example and may overwrite remappings, which can cause build errors.

Then, install the TypeScript dependencies in the vlayer folder by running:

cd vlayer

bun install

Testnet

In order to use the testnet, you will need to provide a couple of secrets.

First, create vlayer/.env.testnet.local; this is where all your secret keys go.

Sign up or log in to dashboard.vlayer.xyz and generate a new testnet JSON Web Token (JWT).

Copy the generated token (it will not be shown again) and save it in vlayer/.env.testnet.local:

VLAYER_API_TOKEN=...

❗️ Note that the JWT is valid for one year; after that, you will need to generate a new token to continue developing with vlayer.
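Because the token expires after a year, you may want a reminder before it lapses. The hypothetical helper below decodes a JWT payload and reads its standard exp claim (seconds since the Unix epoch). It assumes the dashboard token carries an exp claim, and it does not verify the signature, so use it only for reminders, never for authorization decisions.

```typescript
// Hypothetical helper: reads the standard `exp` claim from a JWT payload.
// It only decodes the token and does NOT verify its signature.
function jwtExpiry(token: string): Date {
  const parts = token.split(".");
  if (parts.length !== 3) {
    throw new Error("Not a JWT: expected three dot-separated parts");
  }
  // JWT segments are base64url; convert to standard base64 and restore padding.
  const base64 = parts[1].replace(/-/g, "+").replace(/_/g, "/");
  const padded = base64 + "=".repeat((4 - (base64.length % 4)) % 4);
  const payload = JSON.parse(atob(padded)) as { exp?: unknown };
  if (typeof payload.exp !== "number") {
    throw new Error("Token has no numeric exp claim");
  }
  return new Date(payload.exp * 1000);
}

// Whole days left before the token expires (negative once it has expired).
function daysUntilExpiry(token: string, now: Date = new Date()): number {
  const msPerDay = 24 * 60 * 60 * 1000;
  return Math.floor((jwtExpiry(token).getTime() - now.getTime()) / msPerDay);
}
```

For example, reading VLAYER_API_TOKEN from your environment and warning when daysUntilExpiry returns less than 30 gives you time to generate a new token in the dashboard.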

Next, provide a private key for deploying the example contracts and sending transactions to the verifier, in the vlayer/.env.testnet.local file:

EXAMPLES_TEST_PRIVATE_KEY=0x....

By default, optimismSepolia is configured in the vlayer/.env.testnet file. However, you can override this setting to use other testnets.

To change the desired network, set the CHAIN_NAME and JSON_RPC_URL environment variables in vlayer/.env.testnet.local.
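Conceptually, the .local file is layered on top of the shared defaults, and later values win. The sketch below is a minimal illustration of that precedence, not the loader the example scripts actually use; the parser handles only simple KEY=VALUE lines, and the RPC URLs are placeholders.

```typescript
// Minimal KEY=VALUE parser: skips blank lines and `#` comments and strips one
// pair of matching surrounding quotes. It is not a full dotenv implementation.
function parseEnv(text: string): Record<string, string> {
  const env: Record<string, string> = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    if (eq === -1) continue;
    const key = line.slice(0, eq).trim();
    let value = line.slice(eq + 1).trim();
    if (value.length >= 2 && (value[0] === '"' || value[0] === "'") && value.endsWith(value[0])) {
      value = value.slice(1, -1);
    }
    env[key] = value;
  }
  return env;
}

// Later layers win, so values from .env.testnet.local override .env.testnet.
function layerEnv(...layers: string[]): Record<string, string> {
  return Object.assign({}, ...layers.map(parseEnv));
}

const sharedDefaults = "CHAIN_NAME=optimismSepolia\nJSON_RPC_URL=https://rpc.example.invalid/op";
const localOverrides = "# my overrides\nCHAIN_NAME=baseSepolia\nJSON_RPC_URL=https://rpc.example.invalid/base";
console.log(layerEnv(sharedDefaults, localOverrides).CHAIN_NAME); // baseSepolia
```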

Once configured, run the example from within the vlayer directory using:

bun run prove:testnet

Local devnet

Running examples on a local devnet requires a local instance of the prover and anvil. To run them locally, start these services with Docker:

bun run devnet:up

This command will start all required services in the background.

Once the devnet is up, run the example from within the vlayer directory:

bun run prove:dev

Production

To use production, you will need to provide a couple of secrets.

First, create vlayer/.env.mainnet.local; this is where all your secret keys go.

Sign up or log in to dashboard.vlayer.xyz and generate a new production JSON Web Token (JWT).

Copy the generated token (it will not be shown again) and save it in vlayer/.env.mainnet.local:

VLAYER_API_TOKEN=...

Next, provide a private key for deploying the example contracts and sending transactions to the verifier, in the vlayer/.env.mainnet.local file:

EXAMPLES_TEST_PRIVATE_KEY=0x....

By default, optimism is configured in the vlayer/.env.mainnet file. However, you can override this setting to use other chains.

To change the desired network, set the CHAIN_NAME and JSON_RPC_URL environment variables in vlayer/.env.mainnet.local.

Once configured, run the example from within the vlayer directory using:

bun run prove:mainnet

Web Proof example

First, install the vlayer browser extension from the Chrome Web Store (works with Chrome and Brave browsers). For more details about the extension, see the Web Proofs section.

Start the web app on localhost:

cd vlayer

bun run web:dev

You can manually deploy WebProofProver and WebProofVerifier as well:

cd vlayer

bun run deploy:dev # deploy to local anvil

bun run deploy:testnet # deploy to testnet

❗️ Ensure the contracts are recompiled after every change with

forge build

The app will be available at http://localhost:5174 and displays buttons that let you interact with the extension and the vlayer server (open the browser developer console to see the app activity).

How it works

vlayer introduces new superpowers to Solidity smart contracts:

- Time Travel: Execute a smart contract on historical data.

- Teleport: Execute a smart contract across different blockchain networks.

- Web proof: Access verified web content, including APIs and websites.

- Email proof: Access verified email content.

Prover and Verifier

To implement the above features, vlayer introduces two new contract types: Prover and Verifier.

The Prover code runs on the vlayer zkEVM infrastructure. The result of this operation is a Proof data structure.

The Verifier verifies the generated proof and runs your code on EVM-compatible chains.

Both types of contracts are developed using the Solidity programming language.

vlayer contract execution

A typical vlayer execution flow has three steps:

- The application initiates a call to the Prover contract that is executed off-chain in the zkEVM. All the input for this call is private by default and is not published on-chain.

- The result of the computation is passed, along with a proof, to the on-chain contract. All output returned from the Prover contract is public and is published on-chain as parameters to the Verifier contract.

- The Verifier contract verifies the data sent by the proving party (using the proof submitted by the client) and then executes the Verifier code.

See the diagram below.

The flow of vlayer contract execution


Prover

vlayer Prover contracts have a few distinct properties:

- verifiability - can be executed off-chain and results can't be forged.

- privacy - inputs are private by default and are not published on-chain.

- no gas fees - execution happens off-chain, so no gas is paid and the usual transaction size limits do not apply.

All arguments passed to the Prover contract functions are private by default. To make an argument public, simply add it to the list of returned values.

See the example Prover contract code below. It generates a proof of ownership of a BAYC (Bored Ape Yacht Club) NFT.

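import {Proof} from "vlayer-0.1.0/Proof.sol";
import {Prover} from "vlayer-0.1.0/Prover.sol";
import {IERC721} from "@openzeppelin-contracts/token/ERC721/IERC721.sol";

// BAYC_NFT_ADDR is a placeholder for the address of the BAYC NFT contract.
contract BoredApeOwnership is Prover {
    function main(address _owner, uint256 _apeId) public returns (Proof memory, address) {
        // jump to block 12292922 on Ethereum Mainnet (chainId=1), when BAYC were minted
        setChainId(1, 12292922);

        require(IERC721(BAYC_NFT_ADDR).ownerOf(_apeId) == _owner, "Given address does not own that BAYC");

        return (proof(), _owner);
    }
}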

To access Prover-specific features, your contract needs to derive from the vlayer Prover contract. Then setChainId() teleports the execution context to a historic block on Ethereum Mainnet (chainId=1) in which the first mint of BAYC NFTs occurred. require makes sure that the given address (_owner) was the owner of the specific _apeId at that point in time. Finally, the owner address is returned, which makes it a public input for the Verifier contract; _apeId is not returned, so it stays private.

Verifier

The Verifier smart contract validates the correctness of a computation generated by Prover, without revealing the underlying information. Such contracts can be used to facilitate more complex workflows, such as privacy-preserving decentralized finance (DeFi) applications or confidential voting systems.

Verification logic is immutable once deployed on the blockchain, ensuring consistent and permissionless access.

See the example Verifier contract below. It transfers tokens to the proven owner of a given NFT:

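import {Proof} from "vlayer/Proof.sol";
import {Verifier} from "vlayer/Verifier.sol";
import {IERC20} from "@openzeppelin-contracts/token/ERC20/IERC20.sol";
import {BoredApeOwnership} from "./BoredApeOwnership.sol";

// PROVER_VLAYER_CONTRACT_ADDR and TOKEN_ADDR are placeholders for deployed contract addresses.
contract Airdrop is Verifier {
    function claim(Proof calldata _p, address owner)
        public
        onlyVerified(PROVER_VLAYER_CONTRACT_ADDR, BoredApeOwnership.main.selector)
    {
        IERC20(TOKEN_ADDR).transfer(owner, 1000);
    }
}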

Note that the above contract inherits from the vlayer Verifier contract, which is necessary for verifying the computation done by the Prover contract from the previous step.

The claim() function takes the proof returned by the vlayer SDK as its first argument. The other arguments are the public inputs returned from the Prover's main() function, in the same order.

The onlyVerified(address, bytes4) modifier ensures that the proof is valid and takes two arguments:

- Address of the Prover contract

- Function selector of the Prover main function

The proof does not have to be passed to onlyVerified as an argument. However, it must be passed as an argument to the function decorated with onlyVerified, along with the public outputs.
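The checks described above can be illustrated with a toy TypeScript model. It is a sketch under loud assumptions: a SHA-256 digest stands in for the zero-knowledge proof (so, like the FAKE mode described under Prover Modes, it provides no security), and the addresses, selector and lookup function are hypothetical. It shows three points from this section: the private _apeId never appears in the output, the Verifier binds the proof to a specific Prover address and function selector, and the public outputs must be supplied in the order the Prover returned them.

```typescript
import { createHash } from "node:crypto";

// TOY MODEL ONLY: a SHA-256 digest stands in for the zero-knowledge proof.
// Anyone can recompute it, so it demonstrates data flow, not security.
type Address = string;

interface ToyProof {
  prover: Address;
  selector: string;
  digest: string;
}

// Binds the prover address, the function selector and the ordered public outputs.
function commit(prover: Address, selector: string, publicOutputs: unknown[]): string {
  return createHash("sha256")
    .update(JSON.stringify([prover.toLowerCase(), selector, publicOutputs]))
    .digest("hex");
}

// "Prover" side: apeId is a private input; only `owner` is returned, so only it is public.
function proveOwnership(
  prover: Address,
  selector: string,
  owner: Address,
  apeId: number,
  ownerOf: (id: number) => Address, // hypothetical stand-in for the historical on-chain lookup
): [ToyProof, Address] {
  if (ownerOf(apeId).toLowerCase() !== owner.toLowerCase()) {
    throw new Error("Given address does not own that ape"); // mirrors the require()
  }
  const publicOutputs = [owner];
  return [{ prover, selector, digest: commit(prover, selector, publicOutputs) }, owner];
}

// "Verifier" side: mirrors onlyVerified(prover, selector). The proof comes first
// and the public outputs follow in the order the Prover returned them.
function onlyVerified(
  expectedProver: Address,
  expectedSelector: string,
  proof: ToyProof,
  ...publicOutputs: unknown[]
): boolean {
  return (
    proof.prover.toLowerCase() === expectedProver.toLowerCase() &&
    proof.selector === expectedSelector &&
    proof.digest === commit(expectedProver, expectedSelector, publicOutputs)
  );
}
```

Altering, reordering or replacing a public output changes the recomputed digest, so the toy onlyVerified rejects it; with real proofs the same binding is enforced cryptographically rather than by a hash anyone can recompute.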

To learn more about how the Prover and Verifier work under the hood, please refer to our Advanced section.

Devnet, Testnet & Mainnet

The vlayer network consists of several types of nodes: provers, indexers, notaries, and proxies. These nodes are essential for executing vlayer smart contract features, including Time Travel, Teleport, and proofs for Email and Web.

Currently, vlayer supports the following environments:

- testnet: public environment supporting multiple L1 and L2 testnets.

- devnet: local environment that runs with Docker Compose, providing all necessary services for development.

- mainnet: main public network supporting Ethereum Mainnet, Base, Optimism, and Arbitrum.

Testnet

By default, vlayer CLI, SDK, and example apps use the testnet environment, with no additional configuration required.

The Test Prover operates in FAKE mode and works with the following testnets:

| chain | time travel | teleport | email/web |
|---|---|---|---|
| baseSepolia | 🚧 | ✅ | ✅ |
| sepolia | 🚧 | ✅ | ✅ |
| optimismSepolia | ✅ | ✅ | ✅ |
| polygonAmoy | ✅ | | |
| arbitrumSepolia | ✅ | | |
| lineaSepolia | ✅ | | |
| worldchainSepolia | ✅ | | |
| zksyncSepoliaTestnet | ✅ | | |

✅ Supported, 🚧 In progress
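If your scripts choose CHAIN_NAME dynamically, a lookup like the hypothetical one below can fail fast on unsupported chain and feature combinations. The data is transcribed from the testnet table above, with blank cells treated as unsupported and 🚧 treated as not yet usable; it will need updating as support changes.

```typescript
type Feature = "timeTravel" | "teleport" | "emailWeb";
type Support = "supported" | "inProgress";

// Transcribed from the testnet table; a missing entry means "not supported".
const TESTNET_SUPPORT: Record<string, Partial<Record<Feature, Support>>> = {
  baseSepolia: { timeTravel: "inProgress", teleport: "supported", emailWeb: "supported" },
  sepolia: { timeTravel: "inProgress", teleport: "supported", emailWeb: "supported" },
  optimismSepolia: { timeTravel: "supported", teleport: "supported", emailWeb: "supported" },
  polygonAmoy: { timeTravel: "supported" },
  arbitrumSepolia: { timeTravel: "supported" },
  lineaSepolia: { timeTravel: "supported" },
  worldchainSepolia: { timeTravel: "supported" },
  zksyncSepoliaTestnet: { timeTravel: "supported" },
};

// True only for combinations marked ✅ in the table.
function isSupported(chain: string, feature: Feature): boolean {
  return TESTNET_SUPPORT[chain]?.[feature] === "supported";
}

console.log(isSupported("optimismSepolia", "teleport")); // true
console.log(isSupported("sepolia", "timeTravel")); // false (in progress)
```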

Public Testnet Services

| Service | Endpoint | Description |
|---|---|---|
| Prover | https://stable-fake-prover.vlayer.xyz | zkEVM prover for vlayer contracts |
| Nightly Prover | https://nightly-fake-prover.vlayer.xyz | Nightly version of the zkEVM prover |
| Indexer | https://stable-fake-chainservice.vlayer.xyz | Storage proof indexer |
| Nightly Indexer | https://nightly-fake-chainservice.vlayer.xyz | Nightly version of the indexer |
| Notary | https://test-notary.vlayer.xyz | TLS Notary server |
| WebSocket Proxy | wss://test-wsproxy.vlayer.xyz | Proxying websocket connections for TLS Notary |

Devnet

Devnet allows you to run the full stack locally, including anvil and all required vlayer nodes.

Starting Devnet

Prerequisites

From the vlayer Project

Navigate to the vlayer project directory and start services in the background:

cd ${project}/vlayer

bun run devnet:up

You can stop devnet anytime with:

cd ${project}/vlayer

bun run devnet:down

Stopping and restarting devnet is useful after any Docker configuration change.

Available Services

| Service | Endpoint | Description |
|---|---|---|
| Anvil-A | http://127.0.0.1:8545 | Local devnet |
| Anvil-B | http://127.0.0.1:8546 | Secondary devnet (for time travel/teleport testing) |
| Anvil-C | http://127.0.0.1:8547 | Tertiary devnet (for time travel/teleport testing) |
| Prover | http://127.0.0.1:3000 | zkEVM prover for vlayer contracts |
| Indexer | http://127.0.0.1:3001 | Storage proof indexer |
| Notary | http://127.0.0.1:7047 | TLS Notary server |
| WebSocket Proxy | ws://127.0.0.1:3003 | Proxying websocket connections |

Clearing Cache

Cached proofs for time travel and teleport are stored in ./chain_db and can be deleted manually:

rm -rf ./chain_db

Mainnet

The Mainnet Prover operates in GROTH16 mode and works with the following chains:

| chain | time travel | teleport | email/web |
|---|---|---|---|
| mainnet | ✅ | ✅ | ✅ |
| base | ✅ | ✅ | ✅ |
| optimism | ✅ | ✅ | ✅ |

✅ Supported, 🚧 In progress

Public Mainnet Services

| Service | Endpoint | Description |
|---|---|---|
| Prover | https://stable-prod-prover.vlayer.xyz | zkEVM prover for vlayer contracts |
| Indexer | https://chainservice.vlayer.xyz | Storage proof indexer |
| Notary | https://notary.vlayer.xyz | TLS Notary server |
| WebSocket Proxy | wss://wsproxy.vlayer.xyz | Proxying websocket connections for TLS Notary |
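To avoid accidentally mixing services from different environments, you may want to keep the endpoints in one place. The map below copies the stable (non-nightly) URLs from the service tables above. How these values are fed into the vlayer SDK or CLI is not covered here, so treat this as a hypothetical configuration helper.

```typescript
type Environment = "devnet" | "testnet" | "mainnet";

interface Endpoints {
  prover: string;
  indexer: string;
  notary: string;
  wsProxy: string;
}

// Stable endpoints copied from the Devnet, Testnet and Mainnet service tables.
const ENDPOINTS: Record<Environment, Endpoints> = {
  devnet: {
    prover: "http://127.0.0.1:3000",
    indexer: "http://127.0.0.1:3001",
    notary: "http://127.0.0.1:7047",
    wsProxy: "ws://127.0.0.1:3003",
  },
  testnet: {
    prover: "https://stable-fake-prover.vlayer.xyz",
    indexer: "https://stable-fake-chainservice.vlayer.xyz",
    notary: "https://test-notary.vlayer.xyz",
    wsProxy: "wss://test-wsproxy.vlayer.xyz",
  },
  mainnet: {
    prover: "https://stable-prod-prover.vlayer.xyz",
    indexer: "https://chainservice.vlayer.xyz",
    notary: "https://notary.vlayer.xyz",
    wsProxy: "wss://wsproxy.vlayer.xyz",
  },
};

// Returns all endpoints for one environment, so they are always used together.
function endpointsFor(env: string): Endpoints {
  if (!(env in ENDPOINTS)) {
    throw new Error(`Unknown environment "${env}"; expected devnet, testnet or mainnet`);
  }
  return ENDPOINTS[env as Environment];
}

console.log(endpointsFor("testnet").notary); // https://test-notary.vlayer.xyz
```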

Prover Modes

The prover server supports two proving modes:

- FAKE: Designed for development and testing purposes, this mode executes code and verifies its correctness without performing actual proving. While the Verifier contract can confirm computations in this mode, it is vulnerable to exploitation by a malicious Prover.

- GROTH16: Intended for production and final testing, this mode performs real proving.

FAKE Mode

Testnet and devnet provers run in FAKE mode by default.

Note: FAKE mode is limited to dev and test chains to prevent accidental errors.
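You can mirror that restriction on the client side to fail fast during configuration. The hypothetical guard below rejects FAKE mode for the chains listed in the Mainnet table; it is a convenience check only, not a replacement for the restriction described above.

```typescript
type ProofMode = "FAKE" | "GROTH16";

// Chain names taken from the Mainnet table in this documentation.
const PRODUCTION_CHAINS = new Set(["mainnet", "base", "optimism"]);

// Throws if FAKE proofs would be used on a production chain.
function assertModeAllowed(mode: ProofMode, chainName: string): void {
  if (mode === "FAKE" && PRODUCTION_CHAINS.has(chainName)) {
    throw new Error(`FAKE proofs must not be used on production chain "${chainName}"`);
  }
}

assertModeAllowed("FAKE", "optimismSepolia"); // ok: test chain
assertModeAllowed("GROTH16", "optimism"); // ok: real proving
```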

GROTH16 Mode

GROTH16 mode is slower than FAKE mode and requires significant computational resources.

To speed up proof generation, vlayer supports the use of infrastructure like Bonsai (and eventually Boundless) to offload heavy computations to high-performance machines.

To run a prover node in production mode, download and modify call_server/config.toml:

host = "0.0.0.0"
port = 3000
proof_mode = "groth16"
# the rest of the config.toml file...

and call_server/service.yaml:

vlayer-call-server:
  # existing vlayer config
  environment:
    # other env variables...
    BONSAI_API_URL: https://api.bonsai.xyz
    BONSAI_API_KEY: api_key_goes_here
  command: "--config-file /config.toml"

You can request a BONSAI_API_KEY here.

Note: Protocols should be designed with proving execution times in mind, as generating a proof may take several minutes.
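Since a GROTH16 proof may take minutes, clients should wait patiently without hammering the prover. One common approach is exponential backoff with a cap. The hypothetical helper below only computes such a schedule; it makes no assumptions about how the vlayer SDK waits for proofs internally.

```typescript
// Hypothetical polling schedule: exponential backoff starting at initialDelayMs,
// capped at maxDelayMs, stopping before the total wait would exceed budgetMs.
function pollingSchedule(initialDelayMs: number, maxDelayMs: number, budgetMs: number): number[] {
  if (initialDelayMs <= 0) {
    throw new Error("initialDelayMs must be positive");
  }
  const delays: number[] = [];
  let delay = initialDelayMs;
  let total = 0;
  while (total + delay <= budgetMs) {
    delays.push(delay);
    total += delay;
    delay = Math.min(delay * 2, maxDelayMs);
  }
  return delays;
}

// 1 s, 2 s, 4 s, 8 s, 16 s, then 30 s steps, within a 5-minute budget.
console.log(pollingSchedule(1000, 30000, 300000));
```

Each delay would be awaited between status checks; when the schedule is exhausted, report a timeout instead of polling forever.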

Web

Existing web applications, including finance, social media, government, e-commerce and many other types of services, contain valuable information and can be turned into great data sources.

With vlayer, you can leverage this data in smart contracts.

Web Proofs

Web Proofs provide cryptographic proof of web data served by any HTTPS server, allowing developers to use this data in smart contracts. Only a small subset of the required data is published on-chain.

Web Proofs ensure that the data received has not been tampered with. Without Web Proofs, proving this on-chain is difficult, especially when aiming for an automated and trusted solution.

Example Prover

Let's say we want to mint an NFT for a wallet address linked to a specific X/Twitter handle.

Here’s a sample Prover contract:

import {Strings} from "@openzeppelin-contracts/utils/Strings.sol";
import {Proof} from "vlayer-0.1.0/Proof.sol";
import {Prover} from "vlayer-0.1.0/Prover.sol";
import {Web, WebProof, WebProofLib, WebLib} from "vlayer-0.1.0/WebProof.sol";

contract WebProofProver is Prover {
    using Strings for string;
    using WebProofLib for WebProof;
    using WebLib for Web;

    string dataUrl = "https://api.x.com/1.1/account/settings.json";

    function main(WebProof calldata webProof, address account)
        public
        view
        returns (Proof memory, string memory, address)
    {
        Web memory web = webProof.verify(dataUrl);
        string memory screenName = web.jsonGetString("screen_name");
        return (proof(), screenName, account);
    }
}

What happens in the above code?

- Set up the Prover contract:
  - WebProofProver inherits from the Prover contract, enabling off-chain proving of web data.
  - The main function receives a WebProof, which contains a signed transcript of an HTTPS session (see the From JavaScript section on how to obtain a WebProof). The transcript is signed by a Notary (see the Security Considerations section for details about the TLS Notary).

- Verify the Web Proof: the call to webProof.verify(dataUrl) does the following:
  - Verifies the HTTPS transcript.
  - Verifies the Notary's signature on the transcript.
  - Ensures the Notary is on the list of trusted notaries (via their signing key).
  - Confirms the data comes from the expected domain (api.x.com in this case).
  - Checks whether the HTTPS data comes from the expected dataUrl. dataUrl is a URL Pattern against which the actual URL is checked.
  - Ensures that the server's SSL certificate and its chain of authority are verified.
  - Retrieves the plain text transcript for further processing.

- Extract the relevant data: web.jsonGetString("screen_name") extracts the screen_name from the JSON response.

- Return the results: if everything checks out, the function returns the proof placeholder, screenName, and the account.

If there are no errors and the proof is valid, the data is ready for on-chain verification.
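When adapting this Prover to another endpoint, it can help to check the extraction step off-chain against a saved response body first. The sketch below is a hypothetical TypeScript analogue of web.jsonGetString, not part of the vlayer SDK: it assumes dotted paths address nested keys, and it fails when the key is missing or the value is not a string. The sample body is illustrative only.

```typescript
// Hypothetical analogue of `web.jsonGetString(path)`: walks a dotted path and
// requires the final value to be a string.
function jsonGetString(body: string, path: string): string {
  let current: unknown = JSON.parse(body);
  for (const key of path.split(".")) {
    if (typeof current !== "object" || current === null || !(key in current)) {
      throw new Error(`Missing key "${key}" in path "${path}"`);
    }
    current = (current as Record<string, unknown>)[key];
  }
  if (typeof current !== "string") {
    throw new Error(`Value at "${path}" is not a string`);
  }
  return current;
}

// Illustrative response body containing the screen_name field used above.
const sampleBody = '{"screen_name":"example_handle","language":"en"}';
console.log(jsonGetString(sampleBody, "screen_name")); // example_handle
```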

Obtaining Web Proofs

vlayer provides two ways to obtain Web Proofs:

- Client-side (via browser extension)

- Server-side (via the vlayer CLI)

Client-side

The client-side method is intended for scenarios where the target data is authenticated using browser cookies (e.g., logged-in user sessions). It uses the vlayer browser extension to capture and notarize HTTP requests directly from the user’s browsing context.

This approach is ideal for proving access to social media activity, personal or banking data, Web2 loyalty points, reputation scores, or any other cookie-protected content.

💡 Try it Now

To run an example that proves ownership of an X/Twitter handle on your computer, enter the following command in your terminal:

vlayer init --template simple-web-proof

This command will download all the necessary artifacts for your project.

The next steps are explained in the Running Examples and Quickstart Guide.

Server-side

The server-side method is intended for proving data retrieved from HTTP requests that are either public or authenticated via a token. It is a great fit for APIs such as AI models, fintech services, or any backend integration where browser cookies are not required.

💡 Try it Now

To run an example that proves data returned by the Kraken API, enter the following command in your terminal:

vlayer init --template kraken-web-proof

This will download all necessary artifacts to your project.

The next steps are detailed in Running Examples.

Example Verifier

The contract below verifies provided Web Proof and mints a unique NFT for the Twitter/X handle owner’s wallet address.

import {WebProofProver} from "./WebProofProver.sol";
import {Proof} from "vlayer/Proof.sol";
import {Verifier} from "vlayer/Verifier.sol";
import {ERC721} from "@openzeppelin-contracts/token/ERC721/ERC721.sol";

contract WebProofVerifier is Verifier, ERC721 {
    address public prover;

    constructor(address _prover) ERC721("TwitterNFT", "TNFT") {
        prover = _prover;
    }

    function verify(Proof calldata, string memory username, address account)
        public
        onlyVerified(prover, WebProofProver.main.selector)
    {
        uint256 tokenId = uint256(keccak256(abi.encodePacked(username)));
        require(_ownerOf(tokenId) == address(0), "User has already minted a TwitterNFT");
        _safeMint(account, tokenId);
    }
}

What’s happening here?

- Set up the Verifier:
  - The prover variable stores the address of the Prover contract that generated the proof.
  - WebProofProver.main.selector gets the selector for the WebProofProver.main() function.
  - WebProofVerifier inherits from Verifier to access the onlyVerified modifier, which ensures the proof is valid.
  - WebProofVerifier also inherits from ERC721 to support NFTs.

- Verification checks: the tokenId (a hash of the handle) must not already be minted.

- Mint the NFT: once verified, a unique TwitterNFT is minted for the user.

And that's it!

As you can see, Web Proofs can be a powerful tool for building decentralized applications by allowing trusted off-chain data to interact with smart contracts.

Notary

A Notary is a third-party server that participates in a two-sided Transport Layer Security (TLS) session between a client and a server. Its role is to attest that specific communication has occurred between the two parties.

Security Considerations

The Web Proof feature is based on the TLSNotary protocol. Web data is retrieved from an HTTP endpoint, and its integrity and authenticity during the HTTP session are verified using the TLS protocol (the "S" in HTTPS), which secures most modern encrypted connections on the Internet. Web Proofs extend the TLS protocol so that the integrity and authenticity of web data can still be demonstrated after the HTTPS session finishes. The Notary joins the HTTPS session between the client and the server and can cryptographically certify its contents.

From a privacy perspective, it is important to note that the Notary server never has access to the plaintext transcript of the connection; therefore, the Notary can never steal client data or impersonate the client. Furthermore, the client can redact the transcript (i.e. remove certain parts of it), making those parts of the communication inaccessible to the Prover and to the vlayer infrastructure running the Prover.

Redaction

Trust Assumptions

It is important to understand that the Notary is a trusted party in the above setup. Since the Notary certifies the data, a malicious Notary could collude with a malicious client to create fake proofs that would still be successfully verified by the Prover. Currently vlayer runs its own Notary server, which means that vlayer needs to be trusted to certify HTTPS sessions.

Currently vlayer also needs to be trusted when passing additional data (data other than the Web Proof itself) to Prover smart contract, e.g. account in the example above. The Web Proof could be hijacked before running Prover and additional data, different from the original, could be passed to Prover, e.g. an attacker could pass their own address as account in our WebProofProver example. Before going to production this will be addressed by making the setup trustless through an association of the additional data with a particular Web Proof in a way that's impossible to forge.

vlayer will publish a roadmap outlining how it will achieve a high level of security when using the Notary service.

Custom data source - quick guide

This guide walks you through setting up a development environment, configuring a custom data source, and generating/verifying Web Proofs using vlayer.

Setting up dev environment

Initialize a new project using the simple-web-proof template:

vlayer init my-web-proof --template simple-web-proof

Enter the newly created project:

cd my-web-proof

Build contracts:

forge build

This step must be repeated whenever you modify the prover or verifier contract code.

Navigate to the /vlayer directory and install JavaScript dependencies:

cd vlayer

bun install

Start local devnet:

bun run devnet:up

In a separate terminal, run the example web application:

bun run web:dev

Configuring data source

Once the application is running, you can customize it for your chosen data source. We recommend first obtaining a web proof in your browser.

To configure a new data source, open vlayer/src/hooks/useTwitterAccountProof.ts and examine the steps[] attribute in the webProofConfig object.

Example logic is wrapped within a React Hook, but you can achieve the same thing in vanilla JS.

Now you can set up a new data source:

// specify starting page where extension redirects your user
startPage("https://x.com/", "Go to start page"),
// in case of authentication/redirect go to specific page
expectUrl("https://x.com/home", "Log in"),
// Specify which HTTP endpoint made by the browser from the visited website contains the data to be proven.
// This is typically an asynchronous request; you can inspect it using the Network tab in your browser's developer tools.
notarize(
  "https://api.x.com/1.1/account/settings.json",
  "GET",
  "Generate Proof of Twitter profile",
  [
    {
      request: {
        // redact all the headers
        headers_except: [],
      },
    },
    {
      response: {
        // response from api.x.com sometimes comes with Transfer-Encoding: Chunked
        // which needs to be recognised by Prover and cannot be redacted
        headers_except: ["Transfer-Encoding"],
      },
    },
  ],
),
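The headers_except lists above follow an allow-list rule: every header is redacted except the ones named. The hypothetical helper below (not an SDK function) applies that rule to a plain header map so you can preview which headers stay visible. Header names are compared case-insensitively, as in HTTP, and the asterisk masking is purely for display; it says nothing about how redacted bytes are represented in the actual transcript.

```typescript
type HeaderMap = Record<string, string>;

// Redacts every header whose name is not listed in headersExcept.
function redactHeaders(headers: HeaderMap, headersExcept: string[]): HeaderMap {
  const keep = new Set(headersExcept.map((headerName) => headerName.toLowerCase()));
  const result: HeaderMap = {};
  for (const [headerName, value] of Object.entries(headers)) {
    result[headerName] = keep.has(headerName.toLowerCase()) ? value : "*".repeat(value.length);
  }
  return result;
}

// Mirrors the response rule above: only Transfer-Encoding stays visible.
console.log(redactHeaders({ "Transfer-Encoding": "chunked", "Set-Cookie": "session=abc" }, ["Transfer-Encoding"]));
```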

A more detailed description of the JS SDK is available in the JavaScript > Web Proofs section.

If you pick a data source other than api.x.com, you need to regenerate the local API token stored in vlayer/.env.testnet.

To configure https://api.example.com, run the following command:

vlayer jwt encode -p ./fixtures/jwt-authority.key --subject deadbeef --host "api.example.com" --port 443

Explanation of flags:

- -p ./fixtures/jwt-authority.key: Uses a sample private key that generates a valid JWT for the local devnet. Do not modify.

- --subject deadbeef: Arbitrary identifier for local devnet use. Do not modify.

- --host "api.example.com" and --port 443: The host and port of your data source (443 is the standard HTTPS port).

Obtaining Web Proof

Now you can navigate through the example app in your browser (by default running on http://localhost:5137) and check whether the browser extension correctly redirects your user to the data source. The extension supports only Chrome and Brave.

Finding the correct data source HTTP endpoint can be tricky. We recommend using the Network tab in your browser’s Developer Console. To narrow down the results, filter requests by Fetch/XHR, which helps isolate relevant API calls from the rest.

Finding the correct data source HTTP endpoint

A correctly generated web proof is stored in your browser's localStorage under the webProof key. To inspect localStorage:

- Open Developer Console (F12 or right-click > Inspect).

- Navigate to the "Application" tab.

- In the sidebar, find "Local Storage" and select your site's domain.

- Look for the key webProof.
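If you prefer to grab the proof programmatically while debugging, a helper like the hypothetical one below reads the same webProof entry. It assumes the value is stored as a JSON string (check it in the Application tab first), and it takes the storage object as a parameter, so it works with localStorage in the browser and with a stub in tests. The proof's internal structure is not described here, so it is returned as unknown.

```typescript
// Minimal storage interface satisfied by window.localStorage and by test stubs.
interface KeyValueStore {
  getItem(key: string): string | null;
}

// Reads and parses the `webProof` entry; assumes it was stored as JSON.
function readStoredWebProof(store: KeyValueStore): unknown {
  const raw = store.getItem("webProof");
  if (raw === null) {
    throw new Error('No "webProof" entry found in storage');
  }
  return JSON.parse(raw);
}

// In the example app: const webProof = readStoredWebProof(localStorage);
```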

Generating ZK proof in the prover

After obtaining the Web Proof via the browser extension, it must be sent to the vlayer prover contract.

This is done by the callProver() function in vlayer/src/components/organisms/ProveStep/Container.tsx.

Through the vlayer SDK, the proof is passed to the prover contract src/vlayer/WebProofProver.sol. Make sure the correct URL is checked there:

Web memory web = webProof.verify("https://api.x.com/1.1/account/settings.json");

Remember to rebuild and redeploy the contracts after any change to their code:

forge build

cd vlayer

bun run deploy:dev

Verifying on-chain

Once the ZK proof is returned from the prover, it can be used for on-chain verification. The proof, along with the public inputs, has to be passed to WebProofVerifier.sol using a write call:

const writeContractArgs: Parameters<typeof writeContract>[0] = {
  address: import.meta.env.VITE_VERIFIER_ADDRESS as `0x${string}`, // Verifier contract address
  abi: webProofProofVerifier.abi, // ABI for the verifier contract
  functionName: "verify", // Function to call for verification
  args: proofData, // ZK proof data to verify (proof + public input)
};

The whole logic for this step is available in handleMint() in the vlayer/src/components/organisms/MintStep/Container.tsx file.

Common Issues / FAQ

Are there any limitations on the data that can be verified?

Currently, we only support JSON payloads. The maximum payload size per request and response is 10 KB.
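To catch oversized or non-JSON payloads before notarizing, you can run a quick pre-flight check. This hypothetical sketch interprets 10 KB as 10 * 1024 bytes and measures UTF-8 bytes rather than characters; both are assumptions, so adjust the constant if the actual limit is counted differently.

```typescript
// Assumed interpretation of the documented 10 KB limit.
const MAX_PAYLOAD_BYTES = 10 * 1024;

interface PayloadCheck {
  ok: boolean;
  bytes: number;
  reason?: string;
}

// Checks that a request or response body is valid JSON and within the size limit.
function checkPayload(body: string): PayloadCheck {
  const bytes = new TextEncoder().encode(body).length; // UTF-8 size, not character count
  try {
    JSON.parse(body);
  } catch {
    return { ok: false, bytes, reason: "not valid JSON" };
  }
  if (bytes > MAX_PAYLOAD_BYTES) {
    return { ok: false, bytes, reason: `exceeds ${MAX_PAYLOAD_BYTES} bytes` };
  }
  return { ok: true, bytes };
}

console.log(checkPayload('{"screen_name":"example_handle"}').ok); // true
```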

Can I prove GraphQL responses?

No, this is not supported at the moment.

How can I debug extension errors?

- Right-click on the extension sidebar window.

- Select Inspect.

- Go to the Console tab.

Note: The extension console is separate from your webapp console.

Can I make assertions about JSON attributes?

Yes, assertions must be implemented in the Prover code. You can find more details here.

Server-side proving

vlayer supports generating Web Proofs on the server side. This method is especially useful for proving web data returned by public or token-authenticated APIs, without requiring a browser extension.

Typical use cases include verifying responses from centralized services such as Coinbase, Binance, Kraken, Stripe, GitHub, Meta, YouTube or similar platforms.

To notarize HTTP requests from the server, use the vlayer CLI:

vlayer web-proof-fetch [OPTIONS] --url <URL>

Available options:

--url <URL> Full URL of the request to notarize

--host <HOST> Optional host address, if different from the domain provided in URL

--notary <NOTARY_URL> Notary URL [default: https://test-notary.vlayer.xyz/]

-H, --headers <HEADER> Additional headers (format: "Header-Name: Header-Value")

-d, --data <DATA> HTTP data to be sent with the request

-h, --help Print help

-V, --version Print version

Example usage:

vlayer web-proof-fetch --url "https://api.kraken.com/0/public/Ticker?pair=ETHUSD"

The resulting Web Proof (including the URL, headers and body) can be passed to the vlayer prover and then verified on-chain.

💡 Try it Now

To run an example that proves data returned by the Kraken API, enter the following command in your terminal:

vlayer init --template kraken-web-proof

This will download all necessary artifacts to your project.

The next steps are detailed in Running Examples.

For proving HTTP requests that rely on httpOnly cookies, use the client-side approach.

Redaction

What is Redaction?

The redaction feature lets you hide sensitive portions of an HTTPS transcript from the Prover. Common use cases include removing cookies, authorization headers, or API tokens before generating a Web Proof. Everything you leave visible is still cryptographically verified for integrity.

⚠️ Warning! Unsafe byte-range redaction can introduce ambiguities and vulnerabilities. This guide explains how to use redaction safely.

To learn how to enable and configure redaction using the vlayer SDK, see the Redaction section in our JavaScript documentation.

Currently supported redaction targets:

- URL path

- Request headers

- Response headers

Note: Redaction of the response body is currently supported only in unsafe mode, which requires special security considerations. More details will be provided in a future book update.

Security Model

Why Is Caution Needed?

In the TLSN protocol, the foundation for Web Proofs, redaction is performed over raw byte ranges. This means the transcript is treated as an unstructured byte stream, without awareness of HTTP headers, query parameters, or other protocol elements.

For example, this TLSN function redacts bytes from 2 to 4.

redact(2, 4)

Let’s take a closer look at the security nuances of specific cases.

URL Redaction

Consider the following redacted URL path (* stands for redacted character):

/user?name=******************

This redacted form could correspond to multiple original inputs, such as:

/user?name=John&surname=Smith

/user?name=JohnathansLongName

Without access to the hidden portion, it is impossible to recover the original URL from the redacted version. This ambiguity arises because redaction operates on raw byte ranges: only the number of redacted bytes is revealed, while the structure of the redacted part of the path remains unknown.
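The ambiguity can be reproduced with a simplified TypeScript model of byte-range redaction. The redact helper below is an illustration, not the TLSN API: it assumes a half-open range [start, end) and treats each ASCII character as one byte.

```typescript
// Simplified model of byte-range redaction; not the TLSN API.
// Every character in the half-open range [start, end) becomes "*",
// so only the length of the hidden span survives.
function redact(text: string, start: number, end: number): string {
  return text.slice(0, start) + "*".repeat(end - start) + text.slice(end);
}

const queryPrefix = "/user?name=";
const first = "/user?name=John&surname=Smith";
const second = "/user?name=JohnathansLongName";

// Redact everything after the query prefix in both URLs.
const redactedFirst = redact(first, queryPrefix.length, first.length);
const redactedSecond = redact(second, queryPrefix.length, second.length);

console.log(redactedFirst); // /user?name=******************
console.log(redactedFirst === redactedSecond); // true
```

Both inputs produce the same redacted string, which is why a contract cannot infer the hidden query parameters from a redacted URL.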

Enforcing URL Integrity

To guard against URL redaction issues, WebProof provides two verification modes, each restricting how the URL can be redacted.

1. Full-URL verification

Use the verify function to check the integrity of the entire, unredacted URL. This mode resolves URL redaction issues by completely disallowing URL redaction. Example:

function main(WebProof calldata webProof) public {

Web memory web = webProof.verify("example.com/user?name=John&surname=Smith");

...

}

2. URL prefix verification

Use the verifyWithUrlPrefix function to validate that the redacted URL starts with a known prefix. Example:

function main(WebProof calldata webProof) public {

Web memory web = webProof.verifyWithUrlPrefix("example.com/user?name=");

...

}

- Assumes the prefix (“example.com/user?name=”) is correct.

- Treats everything after that prefix as untrusted (it can be redacted).

- Ensures that sensitive suffix data (e.g. user IDs) remains hidden, while protecting contract logic from tampering.
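The difference between the two modes can be illustrated with a simplified TypeScript model in which redacted characters are represented as null. The names redactFrom, verifyFull and verifyWithPrefix are hypothetical and only mimic the semantics described above; the real checks run inside the vlayer prover on the notarized transcript.

```typescript
// Simplified model of the two verification modes; not vlayer's implementation.
// A redacted URL is an array of characters, with null marking redacted positions.
type RedactedUrl = (string | null)[];

// Hypothetical helper: redact everything from index `from` to the end.
function redactFrom(url: string, from: number): RedactedUrl {
  return url.split("").map((ch, i) => (i >= from ? null : ch));
}

// Full-URL mode: every character must be visible and match the expected URL.
function verifyFull(url: RedactedUrl, expected: string): boolean {
  return url.length === expected.length && url.every((ch, i) => ch === expected.charAt(i));
}

// Prefix mode: the prefix must be visible and match; whatever follows is untrusted.
function verifyWithPrefix(url: RedactedUrl, prefix: string): boolean {
  if (url.length < prefix.length) return false;
  for (let i = 0; i < prefix.length; i++) {
    if (url[i] !== prefix.charAt(i)) return false; // a redacted (null) character fails too
  }
  return true;
}

const target = "example.com/user?name=John&surname=Smith";
console.log(verifyWithPrefix(redactFrom(target, 22), "example.com/user?name=")); // true
console.log(verifyFull(redactFrom(target, 22), target)); // false
```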

Details on how to prepare a WebProof with redacted URL can be found here.

Header Redaction

Both verify and verifyWithUrlPrefix functions handle header redactions in the same way, and no additional precautions are required to use them safely. Details on how to prepare a WebProof with redacted headers can be found here.

Email Significance

Many online services, from social media platforms to e-commerce sites, require an email address to create an account. According to recent surveys, more than 80% of businesses consider email to be their primary communication channel, both internally and with customers.

All of this means that our inboxes are full of data that can be leveraged.

Proof of Email

With vlayer, you can access email content from smart contracts and use it on-chain.

You do this by writing a Solidity smart contract (Prover) that has access to the parsed email and returns data to be used on-chain. This allows you to create claims without exposing the full content of an email.

Under the hood, we verify mail server signatures to ensure the authenticity and integrity of the content.

Email Safety Requirements

Not all emails that are considered valid by email servers will meet the validity requirements for vlayer. Email servers use various rules based on DMARC, DKIM, and SPF to determine if an email is valid. When creating an Email Proof, only DKIM (DomainKeys Identified Mail) signatures are used to prove the authenticity of an email. Therefore, the following additional preconditions must be met:

- The email must be signed with a DKIM-Signature header.

- The email must be sent from a domain that has a valid DKIM record.

- The email must have exactly one DKIM signature with a d tag that matches the domain of the From header.

- The email must have a signed From header containing a single email address.

If the email doesn't have a DKIM signature with matching signer and sender domains, the sender's email server may be misconfigured. Emails from domains hosted on providers like Google Workspace or Outlook often have a DKIM signature resembling the following:

From: Alice <alice@xyz.com>

DKIM-Signature: v=1; a=rsa-sha256; c=relaxed/relaxed;

d=xyz-com.***.gappssmtp.com; s=20230601; dara=google.com;

h=...;

bh=...;

b=...

Note that the d tag domain in this example is a subdomain of gappssmtp.com, a Google Workspace domain, while the From header domain is xyz.com. This email will fail DKIM validation with the error "Error verifying DKIM: signature did not verify".

Another potential issue is the use of subdomains.

For example, if the email is sent from an address at subdomain.example.com and the d tag in the DKIM signature is example.com, the email will not be considered valid.

Similarly, if the email is sent from an address at example.com and the d tag is subdomain.example.com, the email will also be invalid.
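The domain rule above amounts to an exact comparison between the d= tag and the domain of the From address, so it can be checked locally before generating a proof. This TypeScript sketch is a hypothetical pre-check, not vlayer's validation code; it assumes a bare local@domain address and compares domains case-insensitively, since DNS names are case-insensitive.

```typescript
// Hypothetical pre-check, not vlayer's validation code.
// Returns the lowercased domain of a bare "local@domain" address.
function domainOf(address: string): string {
  const at = address.lastIndexOf("@");
  if (at <= 0 || at === address.length - 1) {
    throw new Error(`not a local@domain address: ${address}`);
  }
  return address.slice(at + 1).toLowerCase();
}

// The d= tag must equal the From domain exactly: neither a parent domain
// nor a subdomain of the sender's domain is accepted.
function dkimDomainMatches(fromAddress: string, dTag: string): boolean {
  return domainOf(fromAddress) === dTag.trim().toLowerCase();
}

console.log(dkimDomainMatches("alice@xyz.com", "xyz.com")); // true
console.log(dkimDomainMatches("alice@xyz.com", "xyz-com.example.gappssmtp.com")); // false
```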

DKIM validation will fail if the email body has been modified by a proxy server. The body hash included in the DKIM signature ensures the integrity of the email’s content. Any alteration to the body will invalidate the signature.

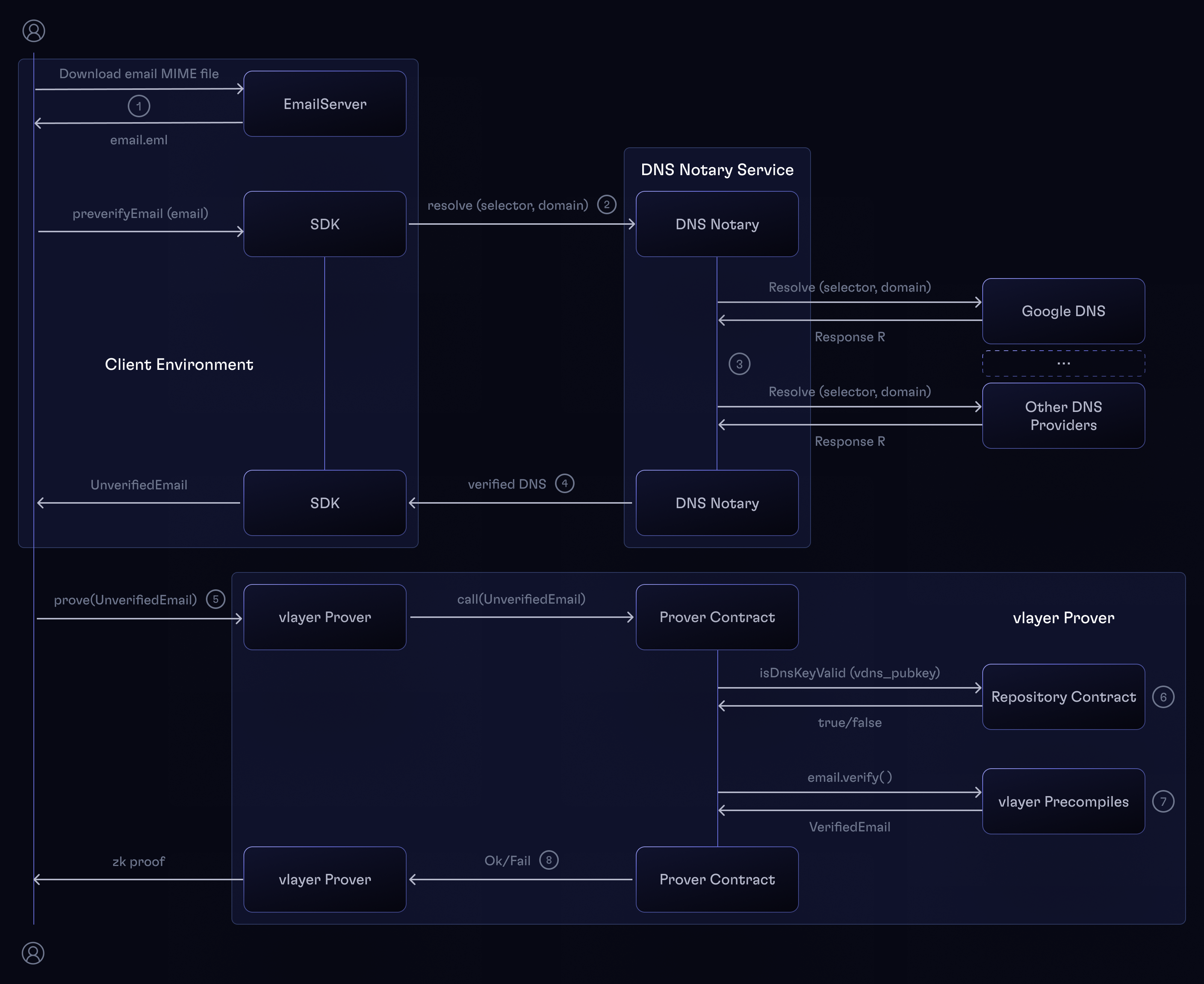

DKIM and DNS Notary

The simplified DKIM flow is:

- The sender's SMTP server has a private and public key pair.

- The public key is published in DNS as a TXT record under <selector>._domainkey.<domain>, where:

  - <selector> is a unique identifier found in the s= tag of the DKIM-Signature header,

  - _domainkey is a fixed string,

  - <domain> is the sender's domain, stored in the d= tag of the DKIM-Signature header.

- The email server adds a DKIM-Signature header to the email and sends it.

- The recipient SMTP server receives the email.

- The recipient SMTP server checks the DKIM-Signature header and reads the d= tag ... stating the signer's domain and selector, which tells it where to look for the key.

- The recipient server fetches the public key from DNS and verifies the signature.
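The first steps of that lookup can be sketched in TypeScript: parse the tag=value list of the DKIM-Signature header (RFC 6376, section 3.2) and build the DNS name of the TXT record holding the public key. The helper names are hypothetical, the parser is deliberately minimal (it does not validate tag syntax), and it stops short of the actual DNS query and signature check.

```typescript
// Minimal parser for the tag=value list of a DKIM-Signature header value.
// Hypothetical helper: it does not validate tag names or values.
function parseDkimTags(headerValue: string): Map<string, string> {
  const tags = new Map<string, string>();
  for (const part of headerValue.split(";")) {
    const eq = part.indexOf("=");
    if (eq === -1) continue; // e.g. an empty segment after a trailing ";"
    const tagName = part.slice(0, eq).trim();
    // Values may be folded across lines; whitespace inside them is dropped here.
    const value = part.slice(eq + 1).replace(/\s+/g, "");
    tags.set(tagName, value);
  }
  return tags;
}

// Builds <selector>._domainkey.<domain> from the s= and d= tags.
function dkimKeyRecordName(headerValue: string): string {
  const tags = parseDkimTags(headerValue);
  const selector = tags.get("s");
  const domain = tags.get("d");
  if (!selector || !domain) throw new Error("DKIM-Signature is missing s= or d=");
  return `${selector}._domainkey.${domain}`;
}

console.log(dkimKeyRecordName("v=1; a=rsa-sha256; d=example.com; s=selector1; bh=abc=; b=def="));
// selector1._domainkey.example.com
```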

The last step is tricky: there is no access to DNS from Solidity. Instead, we have to prove that the DNS record is valid and pass it to the prover contract together with the email.

The DNS Notary (aka Verifiable DNS) service exists for this reason: it uses the DNS over HTTPS (DoH) protocol to fetch DNS records from several providers, signs them if they are valid and secure, and returns the signature together with the record.

Example

Let's say someone wants to prove they are part of a company or organization. One way to do this is to take a screenshot and send it to the verifier. However, this is not very reliable because screenshot images can be easily manipulated, and obviously such an image cannot be verified on-chain.

A better option is to prove that one can send email from their organization's domain. Below is a sample Prover contract that verifies which domain an email was sent from:

import {Strings} from "@openzeppelin-contracts-5.0.1/utils/Strings.sol";

import {Proof} from "vlayer-0.1.0/Proof.sol";

import {Prover} from "vlayer-0.1.0/Prover.sol";

import {RegexLib} from "vlayer-0.1.0/Regex.sol";

import {VerifiedEmail, UnverifiedEmail, EmailProofLib} from "vlayer-0.1.0/EmailProof.sol";

contract EmailDomainProver is Prover {

using RegexLib for string;

using Strings for string;

using EmailProofLib for UnverifiedEmail;

function main(UnverifiedEmail calldata unverifiedEmail, address targetWallet)

public

view

returns (Proof memory, bytes32, address, string memory)

{

VerifiedEmail memory email = unverifiedEmail.verify();

require(email.subject.equal("Verify me for Email NFT"), "incorrect subject");

// Extract domain from email address

string[] memory captures = email.from.capture("^[^@]+@([^@]+)$");

require(captures.length == 2, "invalid email domain");

require(bytes(captures[1]).length > 0, "invalid email domain");

return (proof(), sha256(abi.encodePacked(email.from)), targetWallet, captures[1]);

}

}

It can be convenient to use Regular Expressions to validate the content of the email.

Email is passed to the Solidity contract as an UnverifiedEmail structure that can be created using the preverifyEmail function in the SDK.

preverifyEmail should be called with the raw .eml file content as an argument (learn how to get this file). The email is also required to have From and DKIM-Signature headers.

You can also use the preverifyEmail function inside the Solidity tests.

struct UnverifiedEmail {

string email;

string[] dnsRecords;

}

First, we verify the integrity of the email with the verify() function. Then we have a series of assertions (regular Solidity require()) that check the email details.

If one of the string comparisons fails, require will revert the execution, and as a result, proof generation will fail.

💡 Try it Now

To run the above example on your computer, type the following command in your terminal:

vlayer init --template simple-email-proof

This command will download, create, and initialize a new project with sample email proof contracts.

Email structure

The VerifiedEmail structure is the result of the UnverifiedEmail.verify() function.

Since the verify function actually verifies the passed email, VerifiedEmail's fields can be trusted from this point.

struct VerifiedEmail {

string from;

string to;

string subject;

string body;

}

A VerifiedEmail consists of the following fields:

- from: a string containing the sender's email address (no name is available);

- to: a string containing the intended recipient's email address (no name is available);

- subject: a string with the subject of the email;

- body: a string containing the entire body of the email.

By inspecting and parsing the email payload elements, we can generate a claim to be used on-chain.

Note: If multiple headers share the same name (for example, two From: lines), we always use the last one encountered when parsing or verifying headers (i.e. headers are processed in reverse order). See RFC 6376 §5.4.2 for details.
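The "last occurrence wins" rule can be illustrated with a small TypeScript sketch that scans a raw header block and keeps the final match. lastHeaderValue is a hypothetical helper, not vlayer's parser; it unfolds continuation lines in a simplified way and ignores encoded words and comments.

```typescript
// Hypothetical illustration of "last occurrence wins"; not vlayer's parser.
// rawHeaders is the header block of an .eml file (everything before the first empty line).
function lastHeaderValue(rawHeaders: string, headerName: string): string | undefined {
  // Simplified unfolding: a line break followed by whitespace continues the previous header.
  const unfolded = rawHeaders.replace(/\r?\n[ \t]+/g, " ");
  const wanted = headerName.toLowerCase();
  let found: string | undefined;
  for (const line of unfolded.split(/\r?\n/)) {
    const colon = line.indexOf(":");
    if (colon === -1) continue;
    if (line.slice(0, colon).trim().toLowerCase() === wanted) {
      found = line.slice(colon + 1).trim(); // a later match overwrites an earlier one
    }
  }
  return found;
}

const rawBlock = "From: first@example.com\r\nSubject: hi\r\nFrom: second@example.com\r\n";
console.log(lastHeaderValue(rawBlock, "From")); // second@example.com
```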

Getting .eml Files

Obtaining an .eml file can be helpful for development purposes, such as testing your own email proofs. Below are instructions for retrieving .eml files from common email clients.

Gmail

- Open the email you want to save.

- Click the three-dot menu in the top-right corner of the email.

- Select Download message.

Outlook / Thunderbird

- Open the email you want to save.

- Click on the File menu.

- Select "Save As".

Security Assumptions

Billions of users trust providers to deliver and store their emails. Inboxes often contain critical information, including work-related data, personal files, password recovery links, and more. Email providers also access customer emails for purposes like serving ads. Email proofs can only be as secure as the email itself, and the protocol relies on the trustworthiness of both sending and receiving servers.

Outgoing Server

The vlayer prover verifies that the message signature matches the public key listed in the DNS records. However, a dishonest outgoing server can forge emails and deceive the prover into generating valid proofs for them. To mitigate this risk, vlayer supports only a limited number of the world's most trusted email providers.

Preventing Unauthorized Actions

Both outgoing and incoming servers can read emails and use them to create proofs without the permission of the actual mail sender or receiver. This risk also extends to the prover, which accesses the email to generate claims. It is crucial for protocols to utilize email proofs in a manner that prevents the manipulation of smart contracts into performing unauthorized actions, such as sending funds to unintended recipients.

For example, it is advisable to include complete information in the email to ensure correct actions. Opt for emails like: "Send 1 ETH from address X to address Y on Ethereum Mainnet" over partial instructions, like: "Send 1 ETH," where other details come from another source, such as smart contract call parameters. Another approach is to use unique identifiers that unambiguously point to the necessary details.

From header format

We only accept a single local@domain address, checked as follows:

- One mailbox: exactly one address, no groups/lists.

- Local part (1–64 chars):

  - May include letters (A–Z, a–z), digits (0–9), and these symbols: ! # $ % & ' * + - / = ? ^ _ { | } ~

  - Periods (.) can appear between characters, but not at the very start or end, and never two in a row.

- Domain (1–255 chars):

  - Made of one or more labels separated by periods, e.g. example.com → example + com.

  - Each label can only use letters (A–Z, a–z), digits (0–9), or hyphens (-).

  - A label may not begin or end with a hyphen, and labels can’t be empty (no ..).
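The rules above can be expressed as a small validator, which is handy for checking addresses in tests before generating a proof. This TypeScript sketch is a hypothetical reimplementation based only on the list above, not vlayer's code; it takes the bare address without a display name, and edge cases such as quoted local parts may be handled differently by the prover.

```typescript
// Hypothetical validator for the From address rules listed above; not vlayer's code.
// Local-part atom: letters, digits and the listed symbols (at least one character).
const LOCAL_ATOM = /^[A-Za-z0-9!#$%&'*+\-\/=?^_{|}~]+$/;
// Domain label: letters, digits, hyphens; must not start or end with a hyphen.
const DOMAIN_LABEL = /^[A-Za-z0-9](?:[A-Za-z0-9-]*[A-Za-z0-9])?$/;

function isAcceptedAddress(address: string): boolean {
  const parts = address.split("@");
  if (parts.length !== 2) return false; // exactly one "@", so one mailbox
  const local = parts[0];
  const domain = parts[1];

  if (local.length < 1 || local.length > 64) return false;
  // Splitting on "." yields an empty atom for a leading, trailing or doubled
  // period, which LOCAL_ATOM rejects.
  if (!local.split(".").every((atom) => LOCAL_ATOM.test(atom))) return false;

  if (domain.length < 1 || domain.length > 255) return false;
  return domain.split(".").every((label) => DOMAIN_LABEL.test(label));
}

console.log(isAcceptedAddress("alice@xyz.com")); // true
console.log(isAcceptedAddress("al..ice@xyz.com")); // false
```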

Time travel

Access to historical data

Unfortunately, direct access to the historical state from within smart contracts is not possible. Smart contracts only have access to the current state of the current block.

To overcome this limitation, vlayer introduced the setBlock(uint blockNo) function, available in Prover contracts. It switches the context of subsequent calls to the desired block number.

This allows aggregating data from multiple blocks in a single call to a function.

Example

Prover

The following is an example of Prover code that calculates the average USDC balance at specific block numbers.

contract AverageBalance is Prover {

IERC20 immutable token;

uint256 immutable startingBlock;

uint256 immutable endingBlock;

uint256 immutable step;

constructor() {

token = IERC20(0xA0b86991c6218b36c1d19D4a2e9Eb0cE3606eB48); // USDC

startingBlock = 6600000;

endingBlock = 6700000;

step = 10000;

}

function averageBalanceOf(address _owner) public returns (Proof memory, address, uint256) {

uint256 totalBalance = 0;

uint256 iterations = 0;

for (uint256 blockNo = startingBlock; blockNo <= endingBlock; blockNo += step) {

setBlock(blockNo);

totalBalance += token.balanceOf(_owner); // USDC balance

iterations += 1;

}

return (proof(), _owner, totalBalance / iterations);

}

}

The first call to setBlock(blockNo) sets the Prover context to startingBlock (6600000, configured in the constructor). This means that the next call to token.balanceOf reads data in the context of block 6600000.

With step configured to 10000, the next call to setBlock() sets the Prover context to block 6610000. The subsequent call to token.balanceOf checks the balance again, this time at block 6610000.

Each call to token.balanceOf can return different results if the account balance changes between blocks due to token transfers.

The for loop drives the balance checks, and the function returns the average balance across the sampled blocks, rounded down by Solidity's integer division.
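The sampling schedule of averageBalanceOf can be reproduced off-chain, which helps when choosing startingBlock, endingBlock and step. The TypeScript sketch below mirrors the loop; balanceAt is a hypothetical callback standing in for token.balanceOf after setBlock, and plain numbers are used, which is only exact while totals stay below Number.MAX_SAFE_INTEGER.

```typescript
// Off-chain mirror of the loop in AverageBalance.averageBalanceOf.
// balanceAt is a hypothetical stand-in for token.balanceOf(owner) after setBlock(block).
function averageBalance(
  startingBlock: number,
  endingBlock: number,
  step: number,
  balanceAt: (block: number) => number,
): { blocks: number[]; average: number } {
  const blocks: number[] = [];
  let total = 0;
  for (let block = startingBlock; block <= endingBlock; block += step) {
    blocks.push(block);
    total += balanceAt(block);
  }
  // Solidity would revert on division by zero; fail loudly here as well.
  if (blocks.length === 0) throw new Error("empty block range");
  // Integer division that rounds down, like Solidity's uint256 division.
  return { blocks, average: Math.floor(total / blocks.length) };
}

const sampled = averageBalance(6600000, 6700000, 10000, () => 0);
console.log(sampled.blocks.length); // 11
```

With the constructor values above, the loop reads 11 blocks: 6600000, 6610000, and so on up to 6700000 inclusive.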

Verifier

After proving is complete, the generated proof and public inputs can be used for on-chain verification.

contract AverageBalanceVerifier is Verifier {

address public prover;

mapping(address => bool) public claimed;

HodlerBadgeNFT public reward;

constructor(address _prover, HodlerBadgeNFT _nft) {

prover = _prover;

reward = _nft;

}

function claim(Proof calldata, address claimer, uint256 average)

public

onlyVerified(prover, AverageBalance.averageBalanceOf.selector)

{

require(!claimed[claimer], "Already claimed");

if (average >= 10_000_000) {

claimed[claimer] = true;

reward.mint(claimer);

}

}

}

In this Verifier contract, the claim function allows users to mint an NFT if their average balance is at least 10,000,000 in USDC's smallest units (10 USDC, since USDC uses 6 decimals). The onlyVerified modifier ensures the correctness of the proof and the provided public inputs (claimer and average).

If the proof is invalid or the public inputs are incorrect, the transaction will revert.

💡 Try it Now

To run the above example on your computer, type the following command in your terminal:

vlayer init --template simple-time-travel

This command will download all the necessary artefacts into your current directory (which must be empty). Make sure you have Bun and Foundry installed on your system.

Teleport

Ethereum ecosystem of chains

The Ethereum ecosystem is fragmented, consisting of various EVM chains such as Base, Arbitrum, Optimism, and many more. Developing applications that interact with multiple chains used to be challenging, but Teleport makes it easy.

Teleporting between chains

The setChain(uint chainId, uint blockNo) function, available in Prover contracts, switches the execution context to another chain (teleport). It takes two arguments:

- chainId: the chain in whose context the next function call will be executed;

- blockNo: the block number on the given chain.

Note: At the moment, teleportation is only supported from L1 Ethereum chains (Ethereum mainnet and Ethereum Sepolia) to optimistic L2 chains. Teleports from mainnet can only target optimistic L2 mainnet chains, and teleports from Sepolia can only target optimistic L2 Sepolia chains.

Example

Prover

The example below shows how to check USDC balances across three different chains. The following tokens are passed to the constructor:

Erc20Token[] memory tokens = new Erc20Token[](3);

tokens[0] = Erc20Token(0xA0b86991c6218b36c1d19D4a2e9Eb0cE3606eB48, 1, 20683110); // mainnet

tokens[1] = Erc20Token(0x833589fCD6eDb6E08f4c7C32D4f71b54bdA02913, 8453, 19367633); // base

tokens[2] = Erc20Token(0x0b2C639c533813f4Aa9D7837CAf62653d097Ff85, 10, 124962954); // optimism

contract SimpleTeleportProver is Prover {

Erc20Token[] public tokens;

constructor(Erc20Token[] memory _tokens) {

for (uint256 i = 0; i < _tokens.length; i++) {

tokens.push(_tokens[i]);

}

}

function crossChainBalanceOf(address _owner) public returns (Proof memory, address, uint256) {

uint256 balance = 0;

for (uint256 i = 0; i < tokens.length; i++) {

setChain(tokens[i].chainId, tokens[i].blockNumber);

balance += IERC20(tokens[i].addr).balanceOf(_owner);

}

return (proof(), _owner, balance);

}

}

First, the call to setChain(1, 20683110) sets the chain to Ethereum mainnet (chainId = 1). Then, the ERC20 balanceOf function retrieves the USDC balance of _owner at block 20683110.

Next, setChain(8453, 19367633) switches the context to the Base chain. The balanceOf function then checks the balance at block 19367633, but this time on the Base chain.

Subsequent calls are handled by a for loop, which switches the context to the specified chains and block numbers accordingly.

Verifier

After proving is complete, the generated proof and public inputs can be used for on-chain verification.

contract SimpleTravel is Verifier {

address public prover;

mapping(address => bool) public claimed;

WhaleBadgeNFT public reward;

constructor(address _prover, WhaleBadgeNFT _nft) {

prover = _prover;

reward = _nft;

}

function claim(Proof calldata, address claimer, uint256 crossChainBalance)

public

onlyVerified(prover, SimpleTeleportProver.crossChainBalanceOf.selector)

{

require(!claimed[claimer], "Already claimed");

if (crossChainBalance >= 100_000_000_000) { // 100,000 USDC (6 decimals)

claimed[claimer] = true;

reward.mint(claimer);

}

}

}

In this Verifier contract, the claim function lets users mint an NFT if their combined USDC balance across the three chains is at least $100,000. The onlyVerified modifier ensures that the proof and public inputs (claimer and crossChainBalance) are correct.
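Raw token amounts are easy to misread, so it can help to compute the threshold explicitly. The TypeScript sketch below reproduces the prover's summation and the verifier's comparison off-chain. The function names and sample balances are hypothetical; the only external fact it relies on is that USDC uses 6 decimals on Ethereum, Base and Optimism.

```typescript
// Off-chain sketch of the prover's sum and the verifier's threshold check.
// USDC uses 6 decimals on Ethereum, Base and Optimism: 1 USDC = 1_000_000 units.
const USDC_DECIMALS = 6;

function toUsdcUnits(usdc: number): number {
  return usdc * 10 ** USDC_DECIMALS;
}

// Sums raw balances keyed by chain ID, like the loop in crossChainBalanceOf.
function crossChainBalance(balancesByChain: Map<number, number>): number {
  let total = 0;
  balancesByChain.forEach((units) => {
    total += units;
  });
  return total;
}

// 100,000 USDC expressed in raw units: 100_000_000_000.
const THRESHOLD = toUsdcUnits(100_000);

function qualifies(balancesByChain: Map<number, number>): boolean {
  return crossChainBalance(balancesByChain) >= THRESHOLD;
}

// Hypothetical balances on mainnet (1), Base (8453) and Optimism (10).
const balances = new Map<number, number>([
  [1, toUsdcUnits(60_000)],
  [8453, toUsdcUnits(30_000)],
  [10, toUsdcUnits(10_000)],
]);
console.log(crossChainBalance(balances), qualifies(balances)); // 100000000000 true
```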

If the proof or inputs are invalid, the transaction will revert, and the NFT will not be awarded.

💡 Try it Now

To run the above example on your computer, type the following command in your terminal:

vlayer init --template simple-teleport

This command will download all the necessary artefacts into your current directory (which must be empty). Make sure you have Bun and Foundry installed on your system.

Finality considerations

Finality, in the context of blockchains, is a point at which a transaction or block is fully confirmed and irreversible. When using vlayer setChain teleports, chain finality is an important factor to consider.

Different chains may have different finality thresholds. For example, Ethereum Mainnet blocks typically become final after about 12 minutes.

L2 chains are more complicated. For optimistic rollups such as Optimism and Arbitrum, L2 blocks submitted to L1 are subject to a challenge period (often 7 days). If no evidence of an invalid state transition is submitted during this period, the L2 block is considered final.

Now consider teleporting to blocks that are not yet final on the destination chain. This can lead to proving state that may later be rolled back, so protocols must account for this risk. The simplest approach is to only teleport to blocks that are final and cannot be reorganized.
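One simple way to follow this advice is to stay a fixed number of blocks behind the chain head. The TypeScript sketch below is a rough, time-based approximation and not a vlayer API: it assumes a constant block time, and the 2-second block time and 7-day window used here are illustrative values to verify against the target chain's documentation. Where the chain's RPC exposes a finalized block tag, querying it is more precise than this estimate.

```typescript
// Hypothetical helper, not a vlayer API: pick a block old enough to be
// treated as final, assuming a constant block time for the target chain.
function latestSafeBlock(headBlock: number, blockTimeSeconds: number, finalitySeconds: number): number {
  const lagBlocks = Math.ceil(finalitySeconds / blockTimeSeconds);
  return Math.max(0, headBlock - lagBlocks);
}

function isSafeToTeleport(
  targetBlock: number,
  headBlock: number,
  blockTimeSeconds: number,
  finalitySeconds: number,
): boolean {
  return targetBlock <= latestSafeBlock(headBlock, blockTimeSeconds, finalitySeconds);
}

// Illustrative values: a 7-day challenge window on a chain with 2-second blocks.
const SEVEN_DAYS_SECONDS = 7 * 24 * 60 * 60; // 604800
console.log(latestSafeBlock(1_000_000, 2, SEVEN_DAYS_SECONDS)); // 697600
```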

JSON Parsing and Regular Expressions

When dealing with Web Proofs, the ability to parse JSON data is essential. Similarly, finding specific strings or patterns in the subject or body of an email is crucial for Email Proofs.

To support these needs, we provide helpers for parsing text using regular expressions and extracting data from JSON directly within vlayer Prover contracts.

JSON Parsing

We provide four functions to extract data from JSON based on the field type:

- jsonGetInt(json, path): extracts an integer value and returns int256;

- jsonGetBool(json, path): extracts a boolean value and returns bool;

- jsonGetString(json, path): extracts a string value and returns string memory;

- jsonGetFloatAsInt(json, path, precision): extracts a decimal number from JSON, moves its decimal point right by the specified precision, and returns it as a truncated int256. If precision is greater than the number of decimal digits, the result is padded with zeros. For example, reading 1.234 at precision 2 yields 123, and at precision 4 yields 12340. This approach is used because Solidity does not support floating-point numbers.
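Because jsonGetFloatAsInt works on decimal digits, its result can be predicted off-chain from the number's textual form in the JSON body. The TypeScript sketch below models the documented behavior; floatAsInt is a hypothetical name, and its handling of negative numbers (truncation toward zero) and rejection of exponent notation are assumptions, not documented vlayer behavior. It reads the number as text because JSON.parse would first convert it to a binary float.

```typescript
// Off-chain model of jsonGetFloatAsInt's documented scaling; not vlayer's code.
// Input is the number exactly as written in the JSON body, e.g. "1.234".
// Assumptions: no exponent notation, truncation toward zero for negatives.
function floatAsInt(decimal: string, precision: number): number {
  const match = /^(-?)(\d+)(?:\.(\d+))?$/.exec(decimal.trim());
  if (match === null) throw new Error(`unsupported number format: ${decimal}`);
  const sign = match[1];
  const intPart = match[2];
  const fracPart = match[3] ?? "";
  // Keep exactly `precision` fractional digits: extra digits are dropped
  // (truncation), missing digits are filled with zeros (padding).
  const scaledFrac = (fracPart + "0".repeat(precision)).slice(0, precision);
  const value = parseInt(intPart + scaledFrac, 10);
  return sign === "-" && value !== 0 ? -value : value;
}

console.log(floatAsInt("1.234", 2)); // 123
console.log(floatAsInt("1.234", 4)); // 12340
```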

import {Proof} from "vlayer/Proof.sol";

import {Prover} from "vlayer/Prover.sol";

import {Web, WebLib} from "vlayer/WebProof.sol";

contract JSONContainsFieldProof is Prover {

using WebLib for Web;

function main(Web memory web) public returns (Proof memory, string memory) {

require(web.jsonGetInt("deep.nested.field") == 42, "deep nested field is not 42");

// If we return the provided JSON back, we will be able to pass it to verifier

// Together with a proof that it contains the field

return (proof(), web.body);

}

}

In the example above, the function extracts the value of the field deep.nested.field from the JSON string below and checks if it equals 42.

{

"deep": {

"nested": {

"field": 42

}

}

}

The functions will revert if the field does not exist or if the value is of the wrong type.

JMESPath

Field paths provided to jsonGet... functions are evaluated using JMESPath, a query language for JSON that allows powerful expressions beyond simple key access.

For example, to get the number of elements in an array:

int256 length = web.jsonGetInt("root.nested_level.field_array | length(@)");

require(length == 2, "Expected array of length 2");

To access a specific element from an array:

string memory value = web.jsonGetString("root.nested_level.field_array[1]");

require(keccak256(bytes(value)) == keccak256("val2"), "Unexpected array value");

You can also access fields within arrays of objects:

int256 value = web.jsonGetInt("root.nested_level.field_array_of_objects_with_numbers[1].key");

require(value == 2, "Expected value at index 1");
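To make the paths concrete, the TypeScript sketch below shows a hypothetical response body that would satisfy all three assertions above, together with plain property-access equivalents of each JMESPath expression. The data is invented for illustration; note that JMESPath indices are zero-based, like TypeScript's.

```typescript
// Hypothetical response body, invented to satisfy the three assertions above.
const responseBody = {
  root: {
    nested_level: {
      field_array: ["val1", "val2"],
      field_array_of_objects_with_numbers: [{ key: 1 }, { key: 2 }],
    },
  },
};

const level = responseBody.root.nested_level;

// "root.nested_level.field_array | length(@)"
const arrayLength = level.field_array.length;
// "root.nested_level.field_array[1]"
const secondValue = level.field_array[1];
// "root.nested_level.field_array_of_objects_with_numbers[1].key"
const keyValue = level.field_array_of_objects_with_numbers[1].key;

console.log(arrayLength, secondValue, keyValue); // 2 val2 2
```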

This makes it easy to work with complex JSON structures directly inside your prover logic, without needing preprocessing.

Regular Expressions

Regular expressions are a powerful tool for finding patterns in text.

We provide functions to match and capture a substring using regular expressions:

- matches: checks if a string matches a regular expression and returns true if a match is found;

- capture: checks if a string matches a regular expression and returns an array of strings. The first string is the whole matched text, followed by the captured groups.
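The documented semantics of matches and capture can be approximated with JavaScript's RegExp for quick experiments. This TypeScript sketch is only an approximation: vlayer compiles patterns with its own engine, so syntax support and Unicode behavior may differ, and throwing when nothing matches is an assumption. Groups that did not participate are reported as empty strings, as in the Solidity example later in this section.

```typescript
// Approximation of matches/capture using JavaScript's RegExp; not vlayer's engine.
function matches(text: string, pattern: string): boolean {
  return new RegExp(pattern).test(text);
}

// Returns [whole match, group 1, group 2, ...], with "" for groups that did not participate.
function capture(text: string, pattern: string): string[] {
  const m = new RegExp(pattern).exec(text);
  if (m === null) throw new Error("no match"); // assumption: treat "no match" as an error
  return Array.from(m, (group) => group ?? "");
}

console.log(capture("hello world", "^hello(,)? (world)$")); // [ 'hello world', '', 'world' ]
```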

Regex size optimization

Internally, the regular expression is compiled into a DFA.

The size of the DFA is determined by the regular expression itself, and it can get quite large even for seemingly simple patterns.

The DFA size corresponds to the cycles used in the ZK proof computation, so it should be kept as small as possible.

There is a hard limit on DFA size, which should be sufficient for most use cases.

For example, the regex "\w" matches all Unicode letters, not just ASCII ones, and as a result compiles to a DFA over 100x larger than the simple "[a-zA-Z0-9]" pattern.

In general, to bring the compiled regular expression size down, it is recommended to use more specific patterns.

import {Proof} from "vlayer/Proof.sol";

import {Prover} from "vlayer/Prover.sol";

import {RegexLib} from "vlayer/Regex.sol";

contract RegexMatchProof is Prover {

using RegexLib for string;

function main(string calldata text, string calldata hello_world) public returns (Proof memory, string memory) {

// The regex pattern is passed as a string

require(text.matches("^[a-zA-Z0-9]*$"), "text must be alphanumeric only");

// Example for "hello world" string

string[] memory captures = hello_world.capture("^hello(,)? (world)$");

assertEq(captures.length, 3);

assertEq(captures[0], "hello world");

assertEq(captures[1], "");

assertEq(captures[2], "world");

// Return proof and provided text if it matches the pattern

return (proof(), text);

}

}

Vanilla JS/TS

JavaScript

A common way to interact with a blockchain is to make calls and send transactions from JavaScript, most often from a web browser. vlayer provides a developer-friendly JavaScript/TypeScript API, the vlayer SDK, which works well alongside the standard ways of interacting with smart contracts.

Installation

To install the vlayer SDK, run the following command in your JavaScript application:

yarn add @vlayer/sdk

vlayer client

The vlayer client provides an interface for interacting with the vlayer JSON-RPC API. It enables you to trigger and monitor proof statuses while offering convenient access to features such as Web Proofs and Email Proofs.

Initializing

You can initialize a client as shown below:

import { createVlayerClient } from "@vlayer/sdk";

const vlayer = createVlayerClient();

Proving

In order to start proving, we will need to provide:

- address: the address of the prover contract;

- proverAbi: the ABI of the prover contract;

- functionName: the name of the prover contract function to call;

- args: an array of arguments to the functionName prover contract function;

- chainId: the ID of the chain in whose context the prover contract call is executed.

const hash = await vlayer.prove({

address: '0x70997970c51812dc3a010c7d01b50e0d17dc79c8',

proverAbi: proverSpec.abi,

functionName: 'main',

args: ['0xd8dA6BF26964aF9D7eEd9e03E53415D37aA96045', 123],

chainId: chain.id,

});

Waiting for result

Wait for proving to finish, then retrieve the result along with the Proof:

const result = await vlayer.waitForProvingResult({ hash });

By default, the waitForProvingResult function polls the server for a proof for 15 minutes. This is achieved through 900 retries with a polling interval of 1 second.

You can customize this behavior by specifying the following optional parameters:

- numberOfRetries: the total number of polling attempts;

- sleepDuration: the delay (in ms) between polling attempts.

For example, to extend the polling duration to 180 seconds with a 2-second delay between attempts, configure it as follows:

const provingResult = await vlayer.waitForProvingResult({

hash, // hash returned by vlayer.prove()

numberOfRetries: 90, // Total retries (180s / 2)

sleepDuration: 2000, // 2s interval between retries

});
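The defaults and the example above follow from one formula: the worst-case wait is roughly numberOfRetries × sleepDuration. The TypeScript sketch below shows a generic polling loop with the same two knobs; it illustrates the idea and is not the SDK's implementation of waitForProvingResult, and fetchOnce is a hypothetical callback that resolves to null while proving is still in progress.

```typescript
// Generic polling loop with the same two knobs as waitForProvingResult.
// Illustrative only; not the SDK's implementation.
async function pollForResult<T>(
  fetchOnce: () => Promise<T | null>, // hypothetical: resolves to null while still proving
  numberOfRetries = 900,
  sleepDuration = 1000,
  sleep: (ms: number) => Promise<void> = (ms) => new Promise<void>((resolve) => setTimeout(resolve, ms)),
): Promise<T> {
  for (let attempt = 0; attempt < numberOfRetries; attempt++) {
    const result = await fetchOnce();
    if (result !== null) return result;
    await sleep(sleepDuration);
  }
  throw new Error(`no result after ${numberOfRetries} attempts`);
}

// Total time spent sleeping if every attempt comes back empty, in milliseconds.
function maxWaitMs(numberOfRetries: number, sleepDuration: number): number {
  return numberOfRetries * sleepDuration;
}

console.log(maxWaitMs(900, 1000) / 60_000); // 15 (minutes, the default)
console.log(maxWaitMs(90, 2000) / 1000); // 180 (seconds, the example above)
```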

On-Chain verification

Once the proving result is obtained, one may call the verifier contract to validate the proof. Below is an example using the viem library's writeContract function:

// Create client, see docs here: https://viem.sh/docs/clients/wallet

// const client = createWalletClient({...});

const txHash = await client.writeContract({

address: verifierAddr,

abi: verifierSpec.abi,

functionName: "verify",

args: provingResult,

chain,

account,

});

React Hooks for vlayer

@vlayer/react is a library of React hooks for interacting with vlayer.

These hooks provide functions that help manage state and side effects in React components, aligning with React's functional programming paradigm and style of wagmi hooks.

Prerequisites

The following libraries are required to use @vlayer/react:

- React: A library for building user interfaces.

- Wagmi: A library of React hooks for Ethereum.

- TanStack Query: A library for efficient data fetching and caching.

Add them to your project if they are not already present:

yarn add react react-dom wagmi @tanstack/react-query

Installation

Install the @vlayer/react library using preferred package manager:

yarn add @vlayer/react

Context Providers

Wrap the application with the required React Context Providers and configure the desired connectors and chains to enable @vlayer/react hooks.

import { WagmiProvider, http, createConfig } from "wagmi";

import { baseSepolia, sepolia, optimismSepolia, foundry } from "wagmi/chains";

import { metaMask } from "wagmi/connectors";

import { QueryClient, QueryClientProvider } from "@tanstack/react-query";

import { ProofProvider } from "@vlayer/react";

const wagmiConfig = createConfig({

chains: [baseSepolia, sepolia, optimismSepolia, foundry],

connectors: [metaMask()],

transports: {

[baseSepolia.id]: http(),

[sepolia.id]: http(),

[optimismSepolia.id]: http(),

[foundry.id]: http(),

},

});

const queryClient = new QueryClient();

function App() {

return (

<WagmiProvider config={wagmiConfig}>

<QueryClientProvider client={queryClient}>

<ProofProvider>

{/* Application components go here */}

</ProofProvider>

</QueryClientProvider>

</WagmiProvider>

);

}

export default App;

Context providers facilitate the sharing of application state (e.g., connected wallet, selected chain) across components. Once the setup is complete, components wrapped within the WagmiProvider, QueryClientProvider, and ProofProvider can use the vlayer hooks.


Configuring ProofProvider

The ProofProvider component in vlayer is pre-configured for the testnet environment by default, requiring no additional props for basic usage:

<ProofProvider>
  {/* Application components go here */}
</ProofProvider>

Using the config Prop

The ProofProvider also accepts an optional config prop that lets you select the environment (env). Based on the chosen environment, the provider is automatically configured with the default URLs needed to access vlayer network services:

<ProofProvider
  config={{
    env: "testnet", // Specify the environment: "dev", "testnet", or "prod"
  }}
>
  {/* Application components go here */}
</ProofProvider>
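The env option takes exactly one of the three names dev, testnet, or prod. If the environment is chosen at build or run time (for example from an environment variable), a small type guard keeps invalid strings out of the config prop. The helpers below are a hypothetical sketch, not part of @vlayer/react; the fallback to testnet follows the default described above.

```typescript
// The three environment names listed for the ProofProvider `env` option.
const VLAYER_ENVS = ["dev", "testnet", "prod"] as const;
type VlayerEnv = (typeof VLAYER_ENVS)[number]; // "dev" | "testnet" | "prod"

// Hypothetical helper: narrows an arbitrary string to a valid env name.
function isVlayerEnv(value: string): value is VlayerEnv {
  return VLAYER_ENVS.some((env) => env === value);
}

// Hypothetical helper: uses the given value if it is valid, otherwise falls
// back to "testnet", the environment ProofProvider uses by default.
function pickVlayerEnv(raw: string | undefined): VlayerEnv {
  return raw !== undefined && isVlayerEnv(raw) ? raw : "testnet";
}
```

The result can then be passed as config={{ env: pickVlayerEnv(value) }}, where value is whatever string your app reads its environment from.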

Customizing Service URLs

In addition to selecting an environment, the config prop allows you to specify custom URLs for vlayer network services through proverUrl, notaryUrl, and wsProxyUrl:

<ProofProvider
  config={{
    proverUrl: "https://stable-fake-prover.vlayer.xyz",
    notaryUrl: "https://test-notary.vlayer.xyz",
    wsProxyUrl: "wss://test-wsproxy.vlayer.xyz",
  }}
>
  {/* Application components go here */}
</ProofProvider>

useCallProver

The useCallProver hook is used to interact with the vlayer prover by initiating a proving process with specified inputs.

Example usage

The callProver function initiates the proving process. Proving is an asynchronous operation, and the result (data) contains a hash that can be used to track the status or retrieve the final proof.

import { useCallProver } from "@vlayer/react";

// proverAddress, proverSpec and args are defined elsewhere in the app
const ExampleComponent = () => {
  const {
    callProver,
    data,
    status,
    error,
    isIdle,
    isPending,
    isReady,
    isError,
  } = useCallProver({
    address: proverAddress, // Address of the prover contract
    proverAbi: proverSpec.abi, // ABI of the prover
    functionName: "main", // Function to invoke in the prover
  });

  return (
    // args: the arguments expected by the prover function
    <button onClick={() => callProver([...args])}>
      Prove
    </button>
  );
};

The callProver function must be invoked with the arguments required by the prover contract function.

Besides the proof hash, the hook returns variables to monitor the request and update the UI:

- status: Overall status of the initial call to the prover (idle, pending, ready, or error).
- isIdle: Indicates that no prover call has been initiated.
- isPending: Indicates that the request for the proof hash is in progress.
- isReady: Indicates that the proof hash is available.
- isError: Indicates that an error occurred.
- error: Contains the error message if an error occurred.
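As an example of driving the UI from these values, the sketch below maps each status to a button label and disables the button while a request is in flight. Both helpers are hypothetical and not part of @vlayer/react, and they assume the boolean flags mirror status (for example, isPending is true exactly when status is "pending").

```typescript
// Status values documented for useCallProver's `status` field.
type ProverCallStatus = "idle" | "pending" | "ready" | "error";

// Hypothetical UI helper: picks a button label for each status.
function proveButtonLabel(status: ProverCallStatus): string {
  switch (status) {
    case "idle":
      return "Prove";
    case "pending":
      return "Requesting proof hash...";
    case "ready":
      return "Proving started";
    case "error":
      return "Retry";
  }
}

// Hypothetical UI helper: blocks duplicate requests while one is pending.
function isProveButtonDisabled(status: ProverCallStatus): boolean {
  return status === "pending";
}
```

Because the switch covers every member of the union, TypeScript reports an error if a new status value is added to the type without a matching label.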

useWaitForProvingResult

The useWaitForProvingResult hook waits for a proving process to complete and retrieves the resulting proof.

Example usage

Pass the proof hash to the hook to monitor the proving process and retrieve the proof (data) when it is ready. If no hash (null) is provided, no request is sent to the prover.

Proof computation is an asynchronous operation and, depending on the complexity of the proof, may take a few seconds to complete. The proof (data) is null until the computation is complete.

import { useWaitForProvingResult, useCallProver } from "@vlayer/react";

// proverAddress, proverSpec and args are defined elsewhere in the app
const ExampleComponent = () => {
  const { callProver, data: proofHash } = useCallProver({
    address: proverAddress, // Address of the prover contract
    proverAbi: proverSpec.abi, // ABI of the prover
    functionName: "main", // Function to invoke in the prover
  });

  // Waits for the proof once proofHash is available; data holds the proof when ready
  const {
    data,
    error,
    status,
    isIdle,
    isPending,
    isReady,
    isError,
  } = useWaitForProvingResult(proofHash);

  return (
    <button onClick={() => callProver([...args])}>
      Prove
    </button>
  );
};

The hook provides additional properties for tracking progress and managing UI updates:

- status: Indicates the status of the proving result (idle, pending, ready, or error).
- isIdle: Indicates that the hook has not been triggered (no proof hash has been provided).
- isPending: Indicates that the proof computation is ongoing.
- isReady: Indicates that the final proof is available.
- isError: Indicates that an error occurred during proving.
- error: Contains the error message returned by the prover.
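The documented behaviour of useWaitForProvingResult can be summarized as a small state function. The sketch below is a hypothetical model for reasoning about the states, not the hook's implementation: the ProvingResponse shape is assumed, while the rules (no hash means no request, and the proof stays null until computation finishes) come from the description above.

```typescript
type ProvingStatus = "idle" | "pending" | "ready" | "error";

// Hypothetical shape of what the prover reports for a proof hash.
interface ProvingResponse<P> {
  proof: P | null; // null until the computation is complete
  error?: string; // set when proving failed
}

// Hypothetical model of the hook's status:
// - no hash: no request is sent, so the status stays "idle";
// - hash but no response yet, or proof still null: "pending";
// - an error was reported: "error";
// - a proof is available: "ready".
function deriveProvingStatus<P>(
  hash: string | null | undefined,
  response: ProvingResponse<P> | undefined,
): ProvingStatus {
  if (hash === null || hash === undefined) return "idle";
  if (response === undefined) return "pending";
  if (response.error !== undefined) return "error";
  return response.proof === null ? "pending" : "ready";
}
```

Treating a missing hash as idle is what lets the example pass proofHash straight from useCallProver: before the button is clicked there is no hash, so nothing is requested.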

💡 Try it Now

To see vlayer React hooks in action, run the following command in your terminal:

vlayer init --template simple-email-proof

This command creates a new project. Check out the vlayer/src/components/EmlForm.tsx file to see how vlayer React hooks are used.

Web Proofs from JavaScript

Web Proofs

In addition to providing access to the vlayer JSON-RPC proving API, the vlayer client provides functionality to generate and prove Web Proofs.

vlayer browser extension

vlayer provides a browser extension that, once installed in the user's browser, can be launched from the vlayer SDK and used to generate a Web Proof of a third-party website.

The vlayer extension is compatible with Chrome and Brave browsers.

We start by instantiating the vlayer client.

import { createVlayerClient } from '@vlayer/sdk'

const vlayer = createVlayerClient()

Next, we can define how the vlayer extension should generate the Web Proof. We do this in a declarative way, by specifying the steps the extension should guide the user through.

import {
  createWebProofRequest,
  startPage,
  expectUrl,
  notarize,
} from '@vlayer/sdk/web_proof'

const webProofRequest = createWebProofRequest({
  steps: [
    startPage('https://x.com/i/flow/login', 'Go to x.com login page'),
    expectUrl('https://x.com/home', 'Log in'),
    notarize('https://api.x.com/1.1/account/settings.json', 'GET', 'Generate Proof of Twitter profile'),
  ],
})

The above snippet defines a Web Proof, which is generated by the following steps:

- startPage: redirects the user's browser to https://x.com/i/flow/login.
- expectUrl: ensures that the user is logged in and visiting the https://x.com/home URL. The argument passed here is a URL Pattern against which the browser's current URL is checked.
- notarize: prompts the user to generate a Web Proof, i.e. to notarize an HTTP GET request sent to the https://api.x.com/1.1/account/settings.json URL. This step first captures the request made by the user's browser to the given URL (a URL Pattern against which browser request URLs are matched); once captured, the request is sent again, this time notarized.

Each step also accepts a human-readable message that the user will see. We can also optionally pass a link to a custom logo to display in the extension.
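To build intuition for how expectUrl and notarize compare URLs against a pattern, here is a deliberately simplified, hypothetical matcher. It treats `*` as "any sequence of characters" and everything else literally. This is an assumption made for illustration: the extension works with URL Patterns, whose syntax (named groups, per-component matching) is richer than this sketch.

```typescript
// Hypothetical, simplified matcher: `*` matches any sequence of characters,
// every other character must match literally. Not the URLPattern algorithm.
function matchesSimplePattern(pattern: string, url: string): boolean {
  const regexSource = pattern
    .split("*")
    // escape regex metacharacters in each literal segment
    .map((part) => part.replace(/[.+?^${}()|[\]\\]/g, "\\$&"))
    .join(".*");
  return new RegExp(`^${regexSource}$`).test(url);
}
```

With this matcher, 'https://x.com/home' matches only that exact URL, while a pattern such as 'https://api.x.com/1.1/account/*' would also match the settings.json request used in the notarize step.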

Once we have a definition of how a Web Proof should be generated, we can request it.

import { proverAbi } from './proverAbi'
import { sepolia } from 'viem/chains'

const hash = await vlayer.proveWeb({
  address: '0x70997970c51812dc3a010c7d01b50e0d17dc79c8',
  proverAbi,
  functionName: 'main',
  args: [webProofRequest, '0xd8dA6BF26964aF9D7eEd9e03E53415D37aA96045'],
  chainId: sepolia.id, // numeric chain ID of the chain the prover call runs against
})

The above snippet:

- Opens the vlayer browser extension and guides the user through the steps defined above. The Web Proof is generated using the default vlayer Notary server and WebSocket proxy (see section WebSocket proxy below for more details).

- Once the Web Proof is successfully generated, submits it to the prover contract:
  - with address 0x70997970c51812dc3a010c7d01b50e0d17dc79c8,
  - whose interface is defined by proverAbi,
  - calling the functionName function of the contract,
  - passing the specified args (in this example, the generated Web Proof is passed as the first argument),
  - executing the method call on the prover contract within the context of chainId.

To learn more details about the Web Proof feature, please see the Web Proof section.

Low-level API

While the vlayer client method proveWeb described above provides a convenient interface to both the vlayer browser extension and the prover contract, we also provide methods that can access each of them separately.

We can configure a Web Proof provider that uses the vlayer browser extension and allows configuring a custom Notary server and a custom WebSocket proxy (see section WebSocket proxy below for more details).

import { createExtensionWebProofProvider } from '@vlayer/sdk/web_proof'

const webProofProvider = createExtensionWebProofProvider({
  notaryUrl: 'https://...',
  wsProxyUrl: 'wss://...',
})

Both notaryUrl and wsProxyUrl have default values:

- notaryUrl: https://test-notary.vlayer.xyz
- wsProxyUrl: wss://test-wsproxy.vlayer.xyz

Because of these defaults, the provider can be initialized without any additional configuration as follows:

const webProofProvider = createExtensionWebProofProvider();
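The defaulting behaviour can be pictured as a per-option fallback. The sketch below is hypothetical (it is not the SDK's code) and assumes each option falls back independently, so overriding only notaryUrl keeps the default wsProxyUrl. The two default URLs are the ones documented above.

```typescript
// Default values documented for createExtensionWebProofProvider.
const DEFAULT_NOTARY_URL = "https://test-notary.vlayer.xyz";
const DEFAULT_WS_PROXY_URL = "wss://test-wsproxy.vlayer.xyz";

interface WebProofProviderOptions {
  notaryUrl?: string;
  wsProxyUrl?: string;
}

// Hypothetical helper: any omitted option falls back to its default,
// explicitly provided values are kept as-is.
function resolveWebProofProviderOptions(
  options: WebProofProviderOptions = {},
): Required<WebProofProviderOptions> {
  return {
    notaryUrl: options.notaryUrl ?? DEFAULT_NOTARY_URL,
    wsProxyUrl: options.wsProxyUrl ?? DEFAULT_WS_PROXY_URL,
  };
}
```

Under this assumption, pointing only notaryUrl at a self-hosted notary still routes WebSocket traffic through the default proxy.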

vlayer hosts a public instance of the TLSN notary server for development, experimentation, and demonstration purposes. The Notary server can also be self-hosted using Docker.

In the future, vlayer plans to provide additional Web Proof provider implementations, for example ones that run server-side and do not require the vlayer browser extension to generate Web Proofs.

The Web Proof provider exposes a low-level API to define proverCallCommitment directly and to generate the Web Proof explicitly by calling requestWebProof. The proverCallCommitment is a commitment to use the generated Web Proof only with the specified prover contract call details, so the proof cannot be submitted in a different context.

import {
  startPage,
  expectUrl,
  notarize,
} from '@vlayer/sdk/web_proof'
import { sepolia } from 'viem/chains'
import { proverAbi } from './proverAbi'

// All args required by the prover contract function except the webProof itself
const commitmentArgs = ['0xd8dA6BF26964aF9D7eEd9e03E53415D37aA96045']

const proverCallCommitment = {
  address: '0x70997970c51812dc3a010c7d01b50e0d17dc79c8',
  functionName: 'main',
  commitmentArgs,
  chainId: sepolia.id,
  proverAbi,
}

// webProofProvider was created with createExtensionWebProofProvider() above
webProofProvider.requestWebProof({
  proverCallCommitment,
  steps: [
    startPage('https://x.com/i/flow/login', 'Go to x.com login page'),
    expectUrl('https://x.com/home', 'Log in'),
    notarize('https://api.x.com/1.1/account/settings.json', 'GET', 'Generate Proof of Twitter profile', []),
  ],
});
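Conceptually, the commitment fixes which prover call a Web Proof may be used with. The following sketch is hypothetical and only illustrates which call details have to agree; it is not how vlayer enforces the commitment. It assumes chainId is a numeric chain ID and that the Web Proof is the first prover argument, as in the examples above; all type and function names are made up for illustration.

```typescript
// Hypothetical shape of the commitment fields used above (proverAbi omitted).
interface ProverCallCommitmentSketch {
  address: string;
  functionName: string;
  commitmentArgs: readonly unknown[];
  chainId: number;
}

// Hypothetical shape of an actual prover call: Web Proof first, then the rest.
interface ProverCallSketch {
  address: string;
  functionName: string;
  args: readonly unknown[];
  chainId: number;
}

// Hypothetical check: the call must target the same contract, function and
// chain, and pass the committed arguments after the Web Proof.
function callMatchesCommitment(
  commitment: ProverCallCommitmentSketch,
  call: ProverCallSketch,
): boolean {
  const [, ...rest] = call.args; // drop the Web Proof (first argument)
  return (
    // Ethereum addresses compare case-insensitively (checksum casing aside)
    commitment.address.toLowerCase() === call.address.toLowerCase() &&
    commitment.functionName === call.functionName &&
    commitment.chainId === call.chainId &&
    rest.length === commitment.commitmentArgs.length &&
    rest.every((arg, i) => arg === commitment.commitmentArgs[i])
  );
}
```

This is also why commitmentArgs lists every prover argument except the Web Proof itself: the proof is produced after the commitment is made, so it cannot be part of it.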