Call Prover architecture

vlayer enables three key functionalities: accessing different sources of verifiable data, aggregating this data in a verifiable way to obtain a verifiable result, and using that verifiable result on-chain.

It supports accessing verifiable data from three distinct sources: HTTP requests, emails, and EVM state and storage. For each source, a proof of validity can be generated:

- HTTP requests can be verified using a Web Proof, which includes information about the TLS session and a transcript of the HTTP request and response, signed by a TLS Notary

- Email contents can be proven by verifying DKIM signatures and checking the sender domain

- EVM state and storage proofs can be verified against the block hash via Merkle Proofs

Before vlayer, ZK programs were application-specific and proved a single source of data. vlayer allows you to write a Solidity smart contract (called Prover) that acts as glue between all three data sources and enables you to aggregate this data in a verifiable way: we prove not only that the data we use is valid, but also that it was processed correctly by the Prover.

Aggregation examples

- Prover computing average ERC20 balance of addresses

- Prover returning true if someone starred a GitHub Org by verifying a Web Proof

Note: Despite being named "Prover", the Prover contract does not compute the proof itself. Instead, it is executed inside the zkEVM, which produces the proof of the correctness of its execution.

A Call Proof is a proof that the Prover smart contract was executed correctly and produced the given result.

It can later be verified by a deployed Verifier contract, so that the verifiable result can be used on-chain.

But how are Call Proofs obtained?

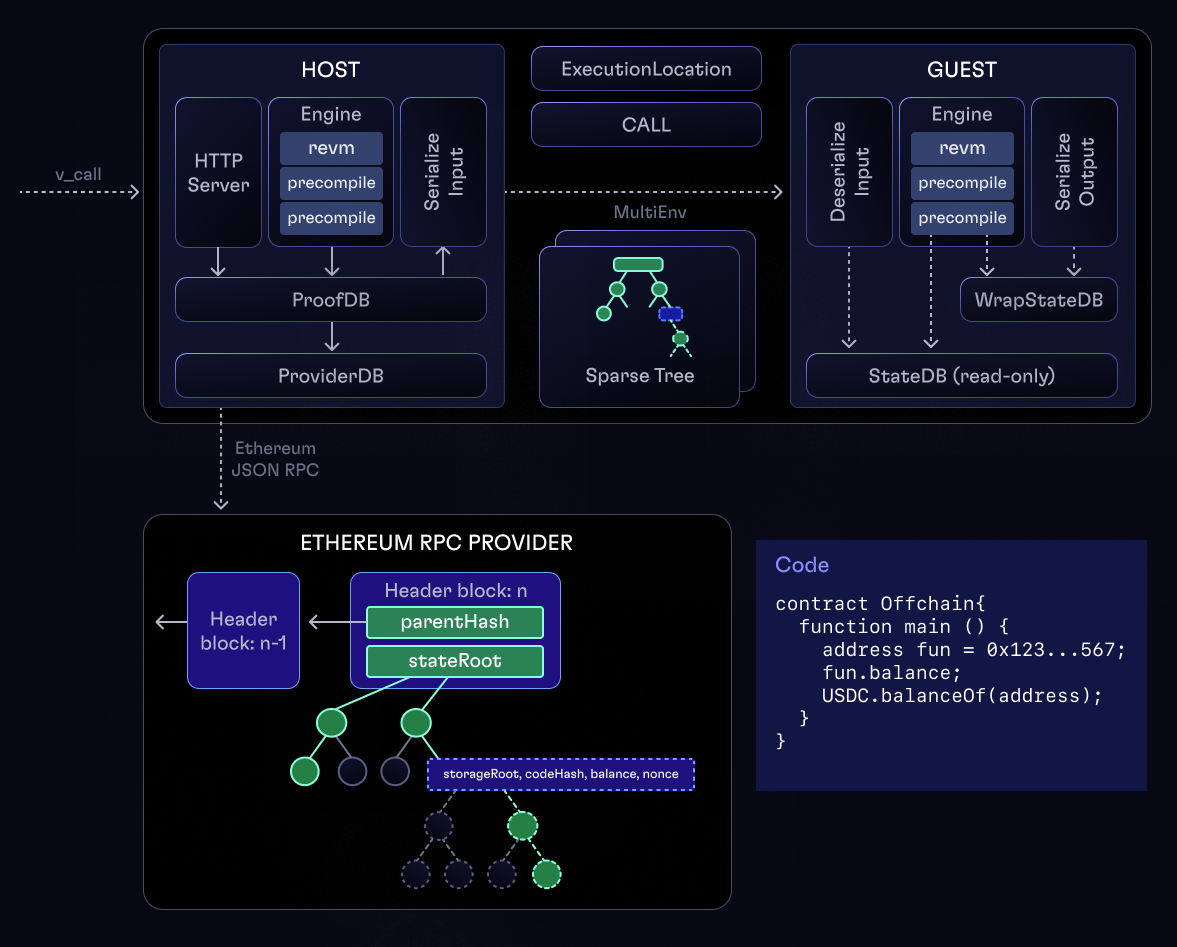

Call Prover

To obtain Call Proofs, the Call Prover is used. It is a Rust server that exposes the v_call JSON-RPC endpoint. The three key components of the prover are the Guest, Host, and Engine. The Guest executes code within the zkEVM to generate a proof of execution. The Host prepares the necessary data and sends it to the Guest. The Engine, responsible for executing the EVM, runs in both the Guest and Host environments.

Their structure and responsibilities are as follows:

- Guest: Performs execution of the code inside the zkEVM. Consists of three crates:

  - `guest` (in `services/call/guest`): Library that contains code for EVM execution and input validation.
  - `risc0_guest` (in `guest_wrapper/risc0_call_guest`): Thin wrapper that uses RISC Zero zkVM I/O and delegates work to `guest`.
  - `guest_wrapper` (in `guest_wrapper`): Compiles `risc0_guest` (using cargo build scripts) to a binary format (ELF) using the RISC Zero target.

- Host (in `services/call/host`): Runs a preflight, during which it collects all the data required by the Guest. It retrieves data from online sources (RPC clients) and then triggers Guest execution (which is done offline).

- Engine (in `services/call/engine`): Sets up and executes the EVM, which executes the `Prover` smart contract (including calls to custom precompiles). It executes exactly the same code in preflight and in Guest execution.

Our architecture is heavily inspired by RISC Zero Steel.

Currently, the Guest is compiled with RISC Zero, but we aim to build vendor-lock-free solutions that work on multiple zk stacks, like SP1 or Jolt.

Runtime configuration

Call Prover can be configured either from a config.toml file, or via environment variables.

TOML

When configuring the prover from a config file, pass it via the --config-file flag:

```sh
$ call_server --config-file config.toml
```

Valid keys for different configuration options are shown in an example TOML file below:

```toml
host = "127.0.0.1"
port = 3000
proof_mode = "fake"
log_format = "plain" # Optional log format to use: [plain, json], defaults to plain

# Optional list of RPC urls for different chains.
# If empty, defaults to Anvil: 31337:http://localhost:8545
[[rpc_urls]]
chain_id = 31337
url = "http://localhost:8545"

[[rpc_urls]]
chain_id = 31338
url = "http://localhost:8546"

# Optional chain client config
[chain_client]
url = "http://localhost:3001"
poll_interval = 5 # Optional polling interval in seconds
timeout = 240 # Optional timeout in seconds

# Optional JWT-based authentication config.
[auth.jwt]
public_key = "/path/to/signing/key.pem"
algorithm = "rs256" # Signing algorithm to use, defaults to rs256

# Optional list of user-defined JWT claims
[[auth.jwt.claims]]
name = "sub" # Specifying just the name of the claim will assert the claim's existence with any value

[[auth.jwt.claims]]
name = "environment"
values = ["test"] # `environment` can only accept a single value: `test`

# Optional gas-meter integration for billing and usage tracking
[gas_meter]
url = "http://localhost:3002"
api_key = "deadbeef"
time_to_live = 3600 # Optional time-to-live for gas meter requests in seconds
```

A few comments about different configuration options:

- `host`: address to use
- `port`: port to bind to
- `proof_mode`: either `fake` (default) for fake proofs, or `groth16` for production proofs on RISC Zero Bonsai
- `log_format`: optional log format, either `plain` (default) or `json`
- `rpc_urls`: optional list of chain RPC URLs for the prover to use; defaults to Anvil if not set (`31337:http://localhost:8545`)
- `chain_client`: optional chain client config (usually used with Time Travel and Teleport)
- `auth`: optional auth module, which currently supports only JWT mode
- `auth.jwt`: optional JWT auth config
- `gas_meter`: optional gas meter config (usually used internally for billing and usage tracking)
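As a rough illustration of how these options and their defaults fit together, the Rust sketch below models a subset of them. The `ServerConfig`, `ProofMode` and `LogFormat` types are hypothetical and are not the prover's actual config types; only the key names and default values come from this page.

```rust
use std::str::FromStr;

/// Mirrors the documented `proof_mode` values.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ProofMode {
    /// Fake proofs (the default).
    Fake,
    /// Production proofs on RISC Zero Bonsai.
    Groth16,
}

impl FromStr for ProofMode {
    type Err = String;
    fn from_str(s: &str) -> Result<Self, Self::Err> {
        match s {
            "fake" => Ok(Self::Fake),
            "groth16" => Ok(Self::Groth16),
            other => Err(format!("unknown proof_mode {other:?}")),
        }
    }
}

/// Mirrors the documented `log_format` values.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum LogFormat {
    Plain,
    Json,
}

impl FromStr for LogFormat {
    type Err = String;
    fn from_str(s: &str) -> Result<Self, Self::Err> {
        match s {
            "plain" => Ok(Self::Plain),
            "json" => Ok(Self::Json),
            other => Err(format!("unknown log_format {other:?}")),
        }
    }
}

/// Hypothetical subset of the server configuration, using the documented defaults.
#[derive(Debug, Clone, PartialEq)]
pub struct ServerConfig {
    pub host: String,
    pub port: u16,
    pub proof_mode: ProofMode,
    pub log_format: LogFormat,
    /// `(chain_id, url)` pairs.
    pub rpc_urls: Vec<(u64, String)>,
}

impl Default for ServerConfig {
    fn default() -> Self {
        Self {
            host: "127.0.0.1".to_string(),
            port: 3000,
            proof_mode: ProofMode::Fake,
            log_format: LogFormat::Plain,
            // Falls back to a local Anvil node when no RPC URLs are given.
            rpc_urls: vec![(31337, "http://localhost:8545".to_string())],
        }
    }
}
```

Parsing the enum values with `FromStr` means an unsupported value such as `proof_mode = "bonsai"` is rejected up front instead of silently falling back to a default.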

Environment variables

If using a config.toml is undesirable, the prover can also be configured through its defaults plus environment variable overrides. Every config option can be overridden.

All variables use the VLAYER_ prefix, and nested config options are separated by a double underscore (__). The current table of environment variable overrides is as follows:

| Environment variable | Config option | Default | Type | Values |
|---|---|---|---|---|
| VLAYER_HOST | host | "127.0.0.1" | string | |
| VLAYER_PORT | port | 3000 | u16 | 0-65535 |
| VLAYER_PROOF_MODE | proof_mode | "fake" | enum | "fake", "groth16" |
| VLAYER_LOG_FORMAT | log_format | "plain" | enum | "plain", "json" |
| VLAYER_RPC_URLS | rpc_urls | "31337:http://localhost:8545" | list | |
| VLAYER_CHAIN_CLIENT__URL | chain_client.url | "http://localhost:3001" | string | |
| VLAYER_CHAIN_CLIENT__POLL_INTERVAL | chain_client.poll_interval | 5 | usize | |
| VLAYER_CHAIN_CLIENT__TIMEOUT | chain_client.timeout | 240 | usize | |
| VLAYER_AUTH__JWT__PUBLIC_KEY | auth.jwt.public_key | "/path/to/signing/key.pem" | string | |
| VLAYER_AUTH__JWT__ALGORITHM | auth.jwt.algorithm | "rs256" | enum | "rs256", "rs384", "rs512", "es256", "es384", "ps256", "ps384", "ps512", "eddsa" |
| VLAYER_AUTH__JWT__CLAIMS | auth.jwt.claims | [] | list | |
| VLAYER_GAS_METER__URL | gas_meter.url | "http://localhost:3002" | string | |
| VLAYER_GAS_METER__API_KEY | gas_meter.api_key | "deadbeef" | string | |
| VLAYER_GAS_METER__TIME_TO_LIVE | gas_meter.time_to_live | 3600 | usize | |
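The naming rule for these variables is mechanical, so it can be sketched as a small function. This is an illustration under assumptions, not the prover's real parser: `env_var_to_config_path` and `parse_rpc_urls` are hypothetical helpers, and the comma separator between multiple `VLAYER_RPC_URLS` entries is assumed rather than documented here.

```rust
/// Converts an override such as `VLAYER_CHAIN_CLIENT__POLL_INTERVAL` into the
/// dotted config path `chain_client.poll_interval`.
/// Returns `None` for variables without the `VLAYER_` prefix.
pub fn env_var_to_config_path(var: &str) -> Option<String> {
    let rest = var.strip_prefix("VLAYER_")?;
    if rest.is_empty() {
        return None;
    }
    // `__` separates nesting levels; a single `_` stays part of the key name.
    let path = rest
        .split("__")
        .map(|segment| segment.to_ascii_lowercase())
        .collect::<Vec<_>>()
        .join(".");
    Some(path)
}

/// Parses a `VLAYER_RPC_URLS` value made of `chain_id:url` entries.
/// ASSUMPTION: multiple entries are separated by commas.
pub fn parse_rpc_urls(value: &str) -> Result<Vec<(u64, String)>, String> {
    value
        .split(',')
        .map(str::trim)
        .filter(|entry| !entry.is_empty())
        .map(|entry| -> Result<(u64, String), String> {
            // Split only at the first ':' so the URL's own colons are preserved.
            let (chain_id, url) = entry
                .split_once(':')
                .ok_or_else(|| format!("missing ':' in {entry:?}"))?;
            let chain_id = chain_id
                .parse::<u64>()
                .map_err(|e| format!("invalid chain id {chain_id:?}: {e}"))?;
            Ok((chain_id, url.to_string()))
        })
        .collect()
}
```

Splitting at the first colon matters because the default value `31337:http://localhost:8545` contains a second colon inside the URL.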

Execution and proving

The Host passes arguments to the Guest via standard input (stdin), and similarly, the Guest returns values via standard output (stdout). The zkVM runs in isolation, without access to disk or network.

On the other hand, when executing Solidity code in the Guest, it needs access to the Ethereum state and storage. The state consists of Ethereum accounts (i.e. balances, contract code and nonces) and the storage consists of smart contract variables. Hence, all the state and storage needs to be passed via input.

However, all input should be treated as untrusted. Therefore, the validity of all state and storage needs to be proven.

Note: In off-chain execution, the notion of the current block doesn't exist, hence we always access Ethereum at a specific historical block. The block number doesn't have to be the latest mined block available on the network. This is different from the current block in on-chain execution, which can access the state at the moment the given transaction is executed.

To deliver all necessary proofs, the following steps are performed:

- In preflight, we execute Solidity code on the Host. Each time the database is called, the value is fetched via Ethereum JSON-RPC and its proof is stored in the database. This database is called `ProofDb`.
- The serialized content of `ProofDb` is passed via stdin to the Guest.
- The Guest deserializes the content into a `StateDb`.
- The validity of the data gathered during preflight is verified in the Guest.
- Solidity code is executed inside revm using `StateDb`.

Since Solidity execution is deterministic, the database in the Guest has exactly the data it requires.
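The record-and-replay idea behind preflight can be sketched in plain Rust. In this minimal sketch, assuming a toy state that only holds balances, `RecordingDb` plays the role of `ProofDb` and `ReplayDb` the role of `StateDb`. All names are hypothetical, no Merkle proofs are collected or checked, and serialization over stdin is elided. The same `total_balance` function runs in both contexts, which is why the replayed data is sufficient.

```rust
use std::cell::RefCell;
use std::collections::HashMap;

/// Stand-in for a 20-byte account address.
pub type Address = u64;

/// Minimal read-only database interface (a stand-in for revm's `DatabaseRef`).
pub trait StateReader {
    fn balance(&self, addr: Address) -> Option<u128>;
}

/// Hypothetical "RPC provider" that is only reachable on the Host.
pub struct RpcDb {
    pub chain: HashMap<Address, u128>,
}

impl StateReader for RpcDb {
    fn balance(&self, addr: Address) -> Option<u128> {
        self.chain.get(&addr).copied()
    }
}

/// Preflight database: forwards each query and records the answer.
pub struct RecordingDb<D> {
    inner: D,
    recorded: RefCell<HashMap<Address, Option<u128>>>,
}

impl<D: StateReader> RecordingDb<D> {
    pub fn new(inner: D) -> Self {
        Self { inner, recorded: RefCell::new(HashMap::new()) }
    }

    /// The recorded answers become the Guest's input.
    pub fn into_recorded(self) -> HashMap<Address, Option<u128>> {
        self.recorded.into_inner()
    }
}

impl<D: StateReader> StateReader for RecordingDb<D> {
    fn balance(&self, addr: Address) -> Option<u128> {
        let value = self.inner.balance(addr);
        self.recorded.borrow_mut().insert(addr, value);
        value
    }
}

/// Guest database: answers only from the recorded input.
pub struct ReplayDb {
    pub data: HashMap<Address, Option<u128>>,
}

impl StateReader for ReplayDb {
    fn balance(&self, addr: Address) -> Option<u128> {
        match self.data.get(&addr) {
            Some(value) => *value,
            // Deterministic execution means this should never happen.
            None => panic!("address {addr} was not recorded during preflight"),
        }
    }
}

/// The "Prover logic": identical code runs on the Host and in the Guest.
pub fn total_balance<D: StateReader>(db: &D, addrs: &[Address]) -> u128 {
    addrs.iter().filter_map(|addr| db.balance(*addr)).sum()
}
```

Only the accounts the execution actually touched end up in the Guest input; an account present on chain but never queried is not transferred.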

Databases

revm requires us to provide a DB that implements the DatabaseRef trait (i.e. one that can be asked about accounts, storage and block hashes).

It's a common pattern to compose databases to orthogonalize the implementation.

We have Host and Guest databases:

- Host: runs `CacheDB<ProofDb<ProviderDb>>`:
  - `ProviderDb`: queries an Ethereum RPC provider (e.g. Alchemy, Infura, Anvil);
  - `ProofDb`: records all queries, aggregates them and collects EIP-1186 (`eth_getProof`) proofs;
  - `CacheDB`: stores trusted seed data to minimize the number of RPC requests. We seed the caller account and some Optimism system accounts.

- Guest: runs `CacheDB<WrapStateDb<StateDb>>`:
  - `StateDb`: consists of the state passed from the Host and has only the content required for deterministic execution of the Solidity code in the Guest. Data in `StateDb` is stored as sparse Merkle Patricia Tries, hence access to accounts and storage serves as verification of state and storage proofs;
  - `WrapStateDb`: an adapter for `StateDb` that implements the `DatabaseRef` trait. It additionally caches the accounts used for storage queries, so that each account is fetched only once across multiple storage queries;
  - `CacheDB`: has the same seed data as its Host counterpart.
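Composition works because each layer implements the same trait and forwards the queries it cannot answer to the layer it wraps. The sketch below mimics `CacheDB<ProofDb<ProviderDb>>` with hypothetical stand-ins (`CacheLayer`, `ProofLayer`, `FakeProvider`) and a nonce-only trait in place of revm's `DatabaseRef`. Unlike revm's `CacheDB`, this `CacheLayer` only serves seeded entries and does not cache forwarded results.

```rust
use std::cell::{Cell, RefCell};
use std::collections::{HashMap, HashSet};

pub type Address = u64;

/// Hypothetical single-method database interface.
pub trait AccountSource {
    fn nonce(&self, addr: Address) -> u64;
}

/// Stand-in for `ProviderDb`: counts how many "RPC requests" it serves.
pub struct FakeProvider {
    pub nonces: HashMap<Address, u64>,
    pub requests: Cell<usize>,
}

impl AccountSource for FakeProvider {
    fn nonce(&self, addr: Address) -> u64 {
        self.requests.set(self.requests.get() + 1);
        self.nonces.get(&addr).copied().unwrap_or(0)
    }
}

/// Stand-in for `ProofDb`: remembers which accounts will need proofs.
pub struct ProofLayer<D> {
    pub inner: D,
    pub touched: RefCell<HashSet<Address>>,
}

impl<D: AccountSource> AccountSource for ProofLayer<D> {
    fn nonce(&self, addr: Address) -> u64 {
        self.touched.borrow_mut().insert(addr);
        self.inner.nonce(addr)
    }
}

/// Stand-in for `CacheDB`: seeded entries are trusted and never forwarded.
pub struct CacheLayer<D> {
    pub inner: D,
    pub seeded: HashMap<Address, u64>,
}

impl<D: AccountSource> AccountSource for CacheLayer<D> {
    fn nonce(&self, addr: Address) -> u64 {
        match self.seeded.get(&addr) {
            Some(nonce) => *nonce,
            None => self.inner.nonce(addr),
        }
    }
}
```

Because the cache sits outermost, seeded accounts cost neither an RPC request nor a proof, which matches the stated purpose of seeding.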

EvmEnv and EvmInput

vlayer enables aggregating data from multiple blocks and multiple chains. We call these features Time Travel and Teleport. To achieve that, we spawn multiple revm instances during Engine execution. Each revm instance corresponds to a certain block number on a certain chain.

The EvmEnv struct represents the configuration required to create a revm instance. Depending on the context, it might be instantiated with ProofDb (Host) or WrapStateDb (Guest).

It is also implicitly parameterized via dynamic dispatch by the Header type, which may differ for various hard forks or networks.

```rust
pub struct EvmEnv<DB> {
    pub db: DB,
    pub header: Box<dyn EvmBlockHeader>,
    // ...
}
```

The serializable input we pass between host and guest is called EvmInput. EvmEnv can be obtained from it.

```rust
pub struct EvmInput {
    pub header: Box<dyn EvmBlockHeader>,
    pub state_trie: MerkleTrie,
    pub storage_tries: Vec<MerkleTrie>,
    pub contracts: Vec<Bytes>,
    pub ancestors: Vec<Box<dyn EvmBlockHeader>>,
}
```

Because we may have multiple blocks and chains, we also have the structs MultiEvmInput and MultiEvmEnv, which map ExecutionLocations to EvmInputs or EvmEnvs, respectively.

```rust
pub struct ExecutionLocation {
    pub chain_id: ChainId,
    pub block_tag: BlockTag,
}
```

CachedEvmEnv

EvmEnv instances are accessed in both Host and Guest using CachedEvmEnv structure. However, the way CachedEvmEnv is constructed differs between these two contexts.

Structure

```rust
pub struct CachedEvmEnv<D: RevmDB> {
    cache: MultiEvmEnv<D>,
    factory: Mutex<Box<dyn EvmEnvFactory<D>>>,
}
```

- `cache`: `HashMap` (aliased as `MultiEvmEnv<D>`) that stores `EvmEnv` instances, keyed by their `ExecutionLocation`
- `factory`: used to create new `EvmEnv` instances

On Host

On Host, CachedEvmEnv is created using the from_factory function. It initializes CachedEvmEnv with an empty cache and a factory of type HostEvmEnvFactory, which is responsible for creating EvmEnv instances.

```rust
pub(crate) struct HostEvmEnvFactory {
    providers: CachedMultiProvider,
}
```

HostEvmEnvFactory uses a CachedMultiProvider to fetch necessary data (such as block headers) and create new EvmEnv instances on demand.

On Guest

On Guest, CachedEvmEnv is created using from_envs. This function takes a pre-populated cache of EvmEnv instances (created on Host) and initializes the factory field with NullEvmEnvFactory.

NullEvmEnvFactory is a dummy implementation that returns an error when its create method is called. This is acceptable because, in the Guest context, there is no need to create new environments; only the cached ones are used.
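The Host/Guest asymmetry can be sketched with a trait object for the factory. This is a simplified model, not vlayer's code: `EvmEnv` is a placeholder, `ExecutionLocation` uses a plain block number instead of `BlockTag`, `HostFactory` and `NullFactory` stand in for `HostEvmEnvFactory` and `NullEvmEnvFactory`, and wrapping the cache in a `Mutex` is an assumption made so that lookups can fill it through `&self`.

```rust
use std::collections::HashMap;
use std::sync::{Arc, Mutex};

pub type ChainId = u64;

/// Simplified key: a block number stands in for the real `BlockTag`.
#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash)]
pub struct ExecutionLocation {
    pub chain_id: ChainId,
    pub block_number: u64,
}

/// Placeholder; the real `EvmEnv` holds a database and a block header.
#[derive(Debug)]
pub struct EvmEnv {
    pub location: ExecutionLocation,
}

pub trait EvmEnvFactory: Send {
    fn create(&self, location: ExecutionLocation) -> Result<EvmEnv, String>;
}

/// Host side: a real factory would fetch headers through RPC providers.
pub struct HostFactory;

impl EvmEnvFactory for HostFactory {
    fn create(&self, location: ExecutionLocation) -> Result<EvmEnv, String> {
        Ok(EvmEnv { location })
    }
}

/// Guest side: creating new environments is never allowed.
pub struct NullFactory;

impl EvmEnvFactory for NullFactory {
    fn create(&self, location: ExecutionLocation) -> Result<EvmEnv, String> {
        Err(format!("no cached EvmEnv for {location:?}"))
    }
}

pub struct CachedEvmEnv {
    cache: Mutex<HashMap<ExecutionLocation, Arc<EvmEnv>>>,
    factory: Mutex<Box<dyn EvmEnvFactory>>,
}

impl CachedEvmEnv {
    /// Host: start with an empty cache and build environments on demand.
    pub fn from_factory(factory: Box<dyn EvmEnvFactory>) -> Self {
        Self { cache: Mutex::new(HashMap::new()), factory: Mutex::new(factory) }
    }

    /// Guest: only the environments prepared by the Host are available.
    pub fn from_envs(envs: HashMap<ExecutionLocation, Arc<EvmEnv>>) -> Self {
        let factory: Box<dyn EvmEnvFactory> = Box::new(NullFactory);
        Self { cache: Mutex::new(envs), factory: Mutex::new(factory) }
    }

    /// Returns the cached environment, creating it via the factory on a miss.
    pub fn get(&self, location: ExecutionLocation) -> Result<Arc<EvmEnv>, String> {
        let mut cache = self.cache.lock().map_err(|e| e.to_string())?;
        if let Some(env) = cache.get(&location) {
            return Ok(Arc::clone(env));
        }
        let factory = self.factory.lock().map_err(|e| e.to_string())?;
        let env = Arc::new(factory.create(location)?);
        cache.insert(location, Arc::clone(&env));
        Ok(env)
    }
}
```

With this shape, a Guest lookup for a location the Host never visited fails loudly instead of silently fetching data it cannot verify.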

Verifying data

Guest is required to verify all data provided by the Host. Initial validation of its coherence is done in two places:

- `multi_evm_input.assert_coherency` verifies:
  - equality of subsequent `ancestor` block hashes;
  - equality of `header.state_root` and the actual `state_root`.

- When we create `StateDb` in the Guest with `StateDb::new`, we compute hashes for the `storage_tries` roots and the `contracts` code. When we later try to access storage (using the `WrapStateDb::basic_ref` function) or contract code (using the `WrapStateDb::code_by_hash_ref` function), we know this data is valid because the hashes were computed properly. If they weren't, we wouldn't be able to access the given storage or code. Thus, storage verification is done indirectly.
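Both checks can be illustrated with a toy model. In this sketch `toy_hash` uses Rust's `DefaultHasher` as a stand-in for keccak256 (it is not cryptographically secure), `Header` is a hypothetical three-field header, and reading "equality of subsequent ancestor block hashes" as a parent-hash link check is our interpretation. `CodeStore` shows why content-addressed lookup verifies code indirectly: bytes are only reachable under their own hash.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{Hash, Hasher};

/// Toy stand-in for keccak256. NOT cryptographically secure.
fn toy_hash<T: Hash + ?Sized>(value: &T) -> u64 {
    let mut hasher = DefaultHasher::new();
    value.hash(&mut hasher);
    hasher.finish()
}

/// Hypothetical minimal block header.
#[derive(Debug, Clone, Hash)]
pub struct Header {
    pub number: u64,
    pub parent_hash: u64,
    pub state_root: u64,
}

impl Header {
    pub fn block_hash(&self) -> u64 {
        toy_hash(self)
    }
}

/// Checks that `ancestors` (ordered newest to oldest) form a hash-linked chain
/// behind `head`: each header's `parent_hash` must equal the next header's hash.
pub fn assert_ancestor_chain(head: &Header, ancestors: &[Header]) -> Result<(), String> {
    let mut child = head;
    for ancestor in ancestors {
        if child.parent_hash != ancestor.block_hash() {
            return Err(format!("block {} does not link to block {}", child.number, ancestor.number));
        }
        child = ancestor;
    }
    Ok(())
}

/// Checks the header's commitment against the root computed from the provided trie.
pub fn assert_state_root(header: &Header, computed_root: u64) -> Result<(), String> {
    if header.state_root == computed_root {
        Ok(())
    } else {
        Err(format!("state_root mismatch in block {}", header.number))
    }
}

/// Contract code keyed by its own hash, computed once at construction.
pub struct CodeStore {
    by_hash: HashMap<u64, Vec<u8>>,
}

impl CodeStore {
    pub fn new(contracts: Vec<Vec<u8>>) -> Self {
        let by_hash = contracts
            .into_iter()
            .map(|code| (toy_hash(&code), code))
            .collect();
        Self { by_hash }
    }

    /// Succeeds only if some provided code actually hashes to `code_hash`.
    pub fn code_by_hash(&self, code_hash: u64) -> Option<&[u8]> {
        self.by_hash.get(&code_hash).map(Vec::as_slice)
    }
}
```

Tampering with any ancestor changes its hash and breaks the link from its child, so a forged header is caught without any extra bookkeeping.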

The above verifications are not enough to ensure the validity of Time Travel (achieved by Chain Proofs) and Teleport. Travel call verification is described in the next section.

Precompiles

As shown in the diagram in the Execution and Proving section, the Engine executes the EVM, which in turn runs the Solidity Prover smart contract. During execution, the contract may call custom precompiles available within the vlayer zkEVM, enabling various advanced features.

The list, configuration, and addresses of these precompiles are defined in services/call/precompiles. These precompiles can be easily accessed within Solidity Prover contracts using libraries included in the vlayer Solidity smart contracts package.
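At the EVM level, a precompile is native code bound to a fixed address, and calls to that address run the native handler instead of bytecode. The sketch below shows only that dispatch step. The `PrecompileSet` type, the `address` helper and the `echo_upper` handler are hypothetical; the real addresses and handlers are the ones defined in services/call/precompiles.

```rust
use std::collections::HashMap;

/// Hypothetical 20-byte EVM address type.
pub type Address = [u8; 20];

/// A precompile maps call input bytes to output bytes or an error.
pub type Precompile = fn(&[u8]) -> Result<Vec<u8>, String>;

/// Builds an address whose last byte is `n` (placeholder values only).
pub fn address(n: u8) -> Address {
    let mut addr = [0u8; 20];
    addr[19] = n;
    addr
}

/// Dummy precompile used only for illustration: upper-cases ASCII input.
fn echo_upper(input: &[u8]) -> Result<Vec<u8>, String> {
    Ok(input.to_ascii_uppercase())
}

pub struct PrecompileSet {
    table: HashMap<Address, Precompile>,
}

impl PrecompileSet {
    pub fn new() -> Self {
        Self { table: HashMap::new() }
    }

    pub fn register(&mut self, at: Address, precompile: Precompile) {
        self.table.insert(at, precompile);
    }

    /// `None` means `to` is not a precompile, so the EVM would execute
    /// the bytecode stored at that address instead.
    pub fn call(&self, to: Address, input: &[u8]) -> Option<Result<Vec<u8>, String>> {
        self.table.get(&to).map(|precompile| precompile(input))
    }
}
```

Keeping handlers as plain function pointers makes the table cheap to build identically in the Host and the Guest, which both run the same Engine code.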

Available precompiles and their functionality

- `WebProof.verify` (via `WebProofLib`): Verifies a `WebProof` and returns a `Web` object containing:
  - `body` (HTTP response body)
  - `notaryPubKey` (TLS Notary's public key that signed the Web Proof)
  - `url` (URL of the HTTP request)

  See Web Proof for details.

- `Web.jsonGetString`, `Web.jsonGetInt`, `Web.jsonGetBool`, `Web.jsonGetFloatAsInt` (via `WebLib`): Parses JSON from an HTTP response body (`Web.body`). See JSON Parsing for more information.

- `UnverifiedEmail.verify` (via `EmailProofLib`): Verifies an `UnverifiedEmail` and returns a `VerifiedEmail` object containing:
  - `from` (sender's email address)
  - `to` (recipient's email address)
  - `subject` (email subject)
  - `body` (email body)

  See Email Proof.

- `string.capture`, `string.match` (via `RegexLib`): Performs regex operations on strings. See Regular Expressions.

- `string.test` (via `URLPatternLib`): Used within `WebProof.verify` to validate `Web.url` against a given URL pattern. See Web Proof.

Error handling

Error handling is done via the HostError enum type, which the server converts into an HTTP status code and a human-readable string.

To handle errors, the Guest panics instead of returning a result. It must panic with a human-readable error, which the Host should convert into a semantic HostError type. As execution in the Guest is deterministic and should never fail after a successful preflight, the panic message should be informative for developers.
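The panic-to-`HostError` conversion can be approximated on a normal host with `std::panic::catch_unwind`, which stands in here for the zkVM executor reporting a failed Guest run. The two-variant `HostError` and its `http_status` mapping are hypothetical; the real enum and its status codes are not described on this page.

```rust
use std::panic;

/// Hypothetical subset of host-side errors; the real `HostError` has its own variants.
#[derive(Debug, PartialEq)]
pub enum HostError {
    /// The guest panicked with a human-readable message.
    Guest(String),
    /// The guest failed in a way that carries no readable message.
    Internal(String),
}

impl HostError {
    /// Assumed mapping to HTTP status codes for the JSON-RPC server.
    pub fn http_status(&self) -> u16 {
        match self {
            HostError::Guest(_) => 400,
            HostError::Internal(_) => 500,
        }
    }
}

/// Runs a guest-like closure and converts a panic into a `HostError`,
/// keeping the panic message so developers can see what went wrong.
pub fn run_guest<F, T>(guest: F) -> Result<T, HostError>
where
    F: FnOnce() -> T + panic::UnwindSafe,
{
    panic::catch_unwind(guest).map_err(|payload| {
        // `panic!("literal")` carries a `&str`; formatted panics carry a `String`.
        if let Some(message) = payload.downcast_ref::<&str>() {
            HostError::Guest(message.to_string())
        } else if let Some(message) = payload.downcast_ref::<String>() {
            HostError::Guest(message.clone())
        } else {
            HostError::Internal("guest panicked with a non-string payload".to_string())
        }
    })
}
```

The default panic hook still prints the message to stderr; the point of the conversion is that the server can return it to the caller with a meaningful status code.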